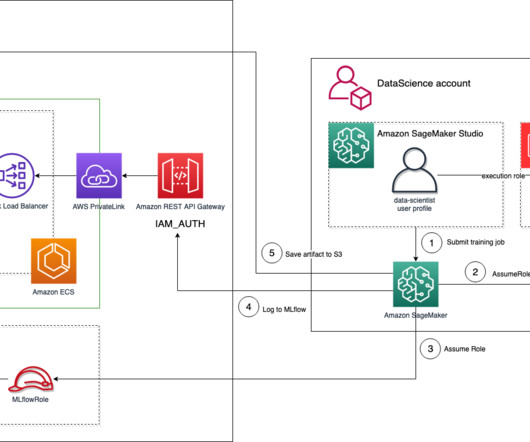

Use AWS PrivateLink to set up private access to Amazon Bedrock

AWS Machine Learning

OCTOBER 30, 2023

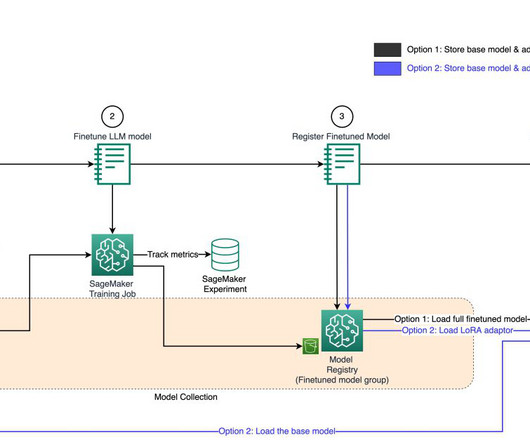

Amazon Bedrock allows developers to build and scale generative AI applications using foundation models (FMs) through an API, without managing infrastructure. Customers are building innovative generative AI applications with the Amazon Bedrock APIs and their own proprietary data.
