Build an image search engine with Amazon Kendra and Amazon Rekognition

AWS Machine Learning

MAY 5, 2023

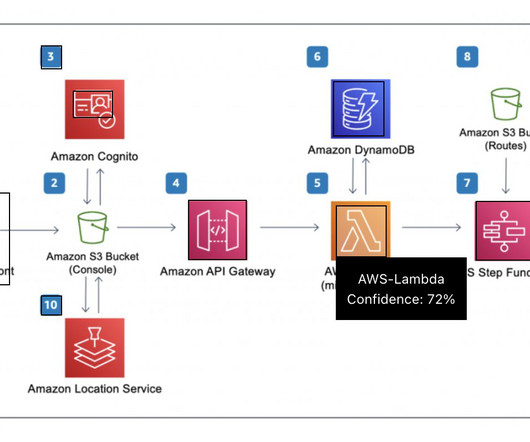

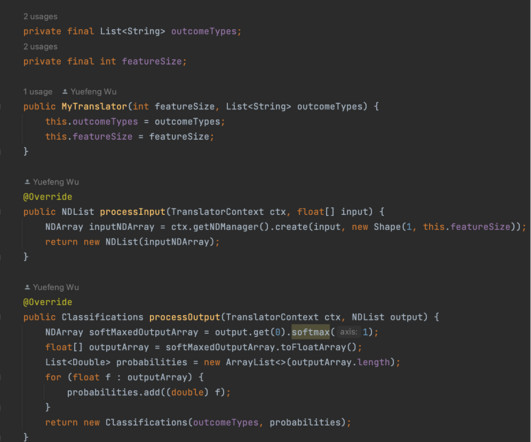

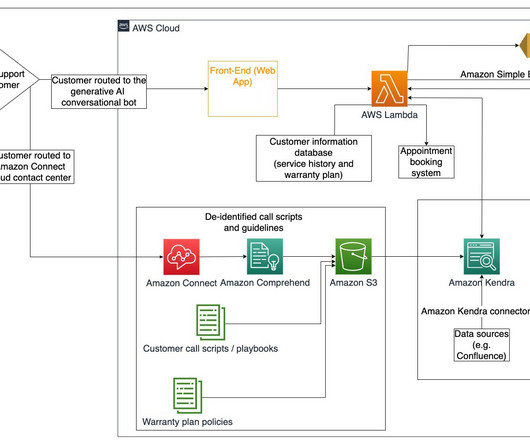

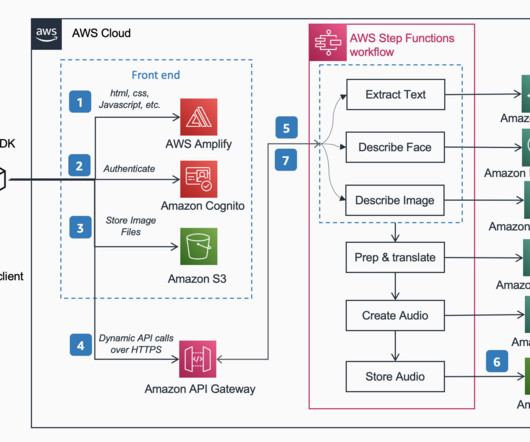

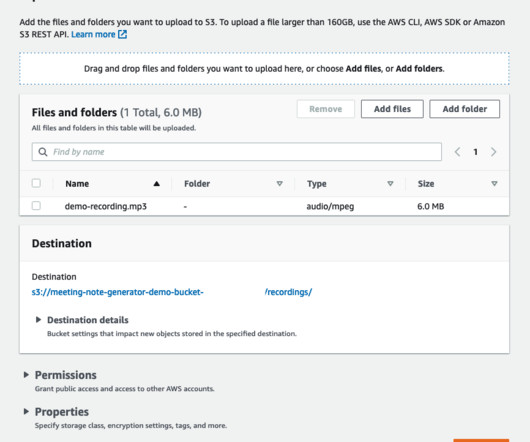

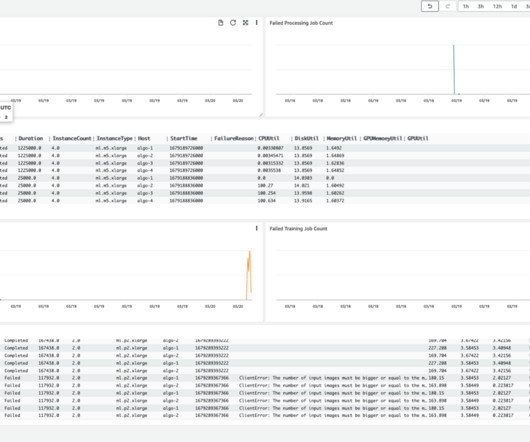

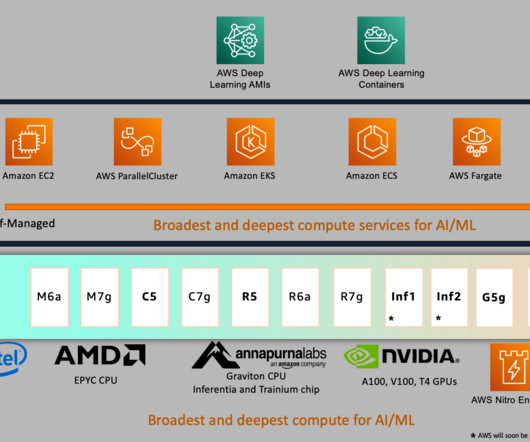

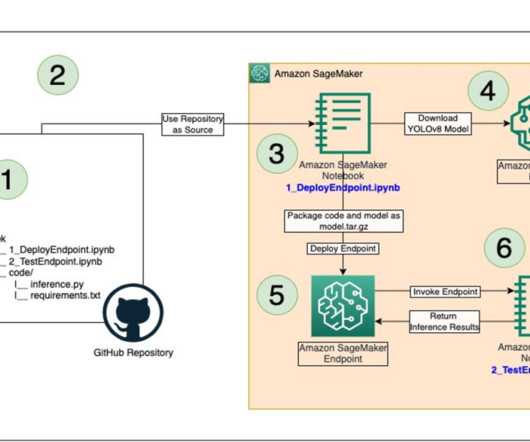

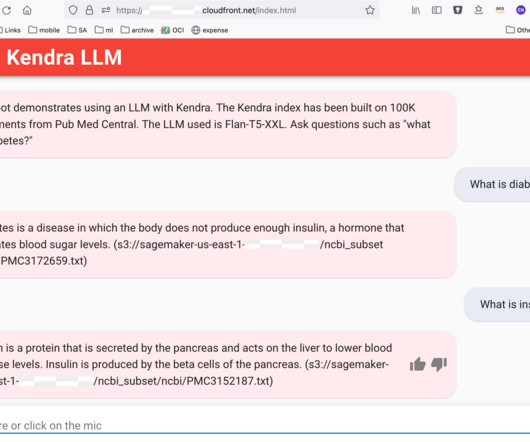

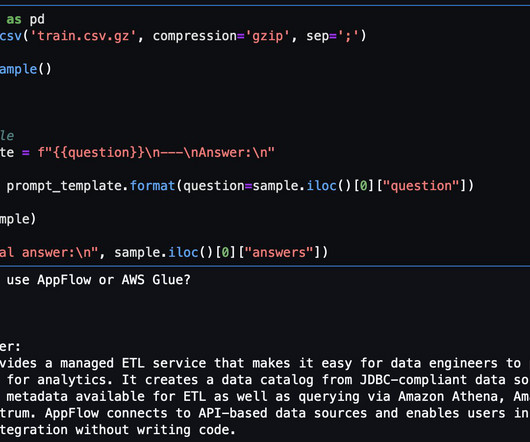

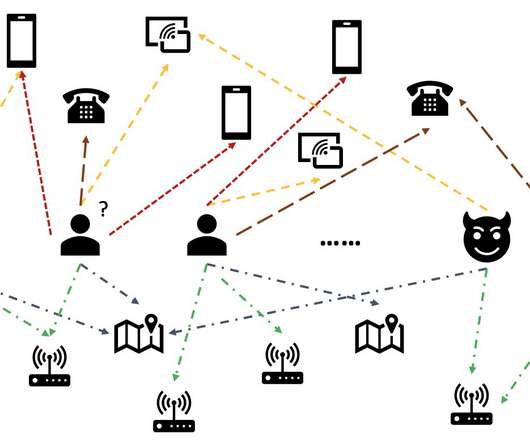

To address the difficulty of complex searches, this post describes how to build a search engine that can search complex images by integrating Amazon Kendra and Amazon Rekognition. A Python script handles uploading the datasets and generating the manifest file.
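The post's own script is not reproduced here. The following is a minimal sketch that assumes the image labels come from the Amazon Rekognition `DetectLabels` API (called through a boto3 client) and that the manifest is a JSON Lines file with one record per image. The function names, the 80% confidence threshold, and the `image_uri`/`labels` record fields are hypothetical choices for illustration, not the format used in the original post.

```python
import json
from typing import Any, Dict, Iterable


def detect_labels_for_object(client: Any, bucket: str, key: str,
                             min_confidence: float = 80.0) -> Dict[str, Any]:
    """Call Rekognition DetectLabels on an image already stored in S3.

    `client` is assumed to be a boto3 Rekognition client, for example
    boto3.client("rekognition"). It is passed in so this module can be
    imported without AWS credentials.
    """
    return client.detect_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,
    )


def build_manifest_entry(bucket: str, key: str, response: Dict[str, Any],
                         min_confidence: float = 80.0) -> Dict[str, Any]:
    """Turn one DetectLabels response into a manifest record.

    The record layout (image_uri, labels) is a hypothetical format.
    Labels below the confidence threshold are dropped, and the rest are
    de-duplicated and sorted so the output is deterministic.
    """
    labels = sorted(
        {label["Name"] for label in response.get("Labels", [])
         if label.get("Confidence", 0.0) >= min_confidence}
    )
    return {"image_uri": f"s3://{bucket}/{key}", "labels": labels}


def to_json_lines(entries: Iterable[Dict[str, Any]]) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(entry, sort_keys=True) + "\n" for entry in entries)


if __name__ == "__main__":
    # A hand-written response shaped like DetectLabels output, so the demo
    # runs without calling AWS.
    sample = {"Labels": [{"Name": "Dog", "Confidence": 98.2},
                         {"Name": "Grass", "Confidence": 61.0}]}
    entry = build_manifest_entry("my-bucket", "images/dog.jpg", sample)
    print(to_json_lines([entry]), end="")
```

Running the demo prints `{"image_uri": "s3://my-bucket/images/dog.jpg", "labels": ["Dog"]}`, because "Grass" falls below the assumed 80% threshold. Keeping the Rekognition call separate from the record-building step lets the manifest logic be tested offline with sample responses.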
