Run PyTorch Lightning and native PyTorch DDP on Amazon SageMaker Training, featuring Amazon Search

AWS Machine Learning

AUGUST 18, 2022

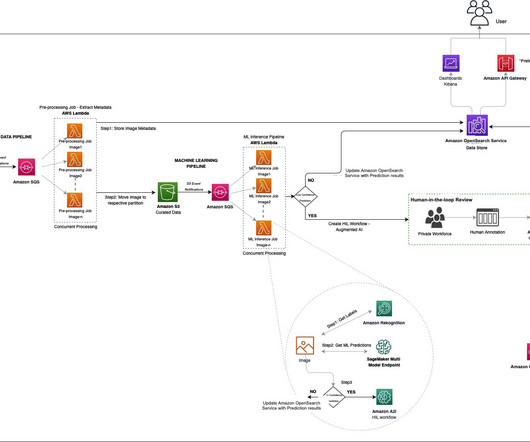

Amazon SageMaker is a fully managed service for machine learning (ML), and SageMaker model training is an optimized compute environment for high-performance training at scale. SageMaker model training offers a remote training experience with a seamless control plane, so you can train and reproduce ML models at high performance and low cost.
