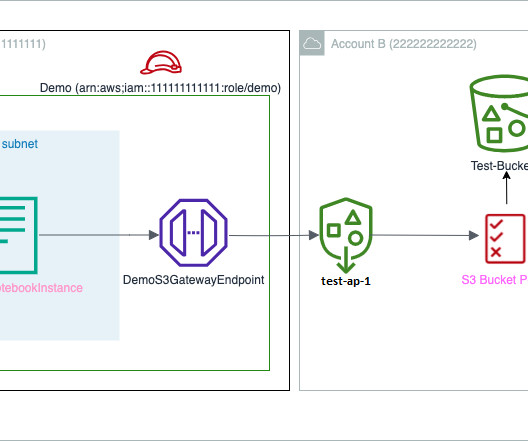

Set up cross-account Amazon S3 access for Amazon SageMaker notebooks in VPC-only mode using Amazon S3 Access Points

AWS Machine Learning

MARCH 13, 2024

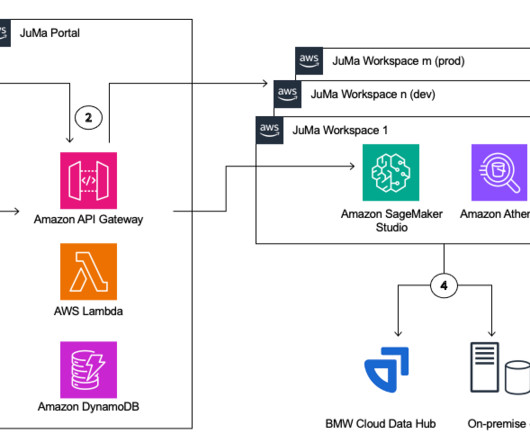

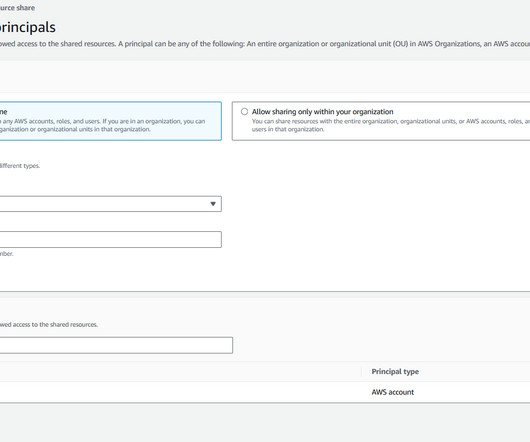

To develop models for such use cases, data scientists need access to a variety of datasets, such as data from credit decision engines, customer transactions, risk appetite, and stress testing. Amazon S3 Access Points simplify managing and securing data access at scale for applications that use shared datasets on Amazon S3.
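As a rough illustration of what access through an access point can look like from a notebook, the sketch below builds an access point ARN and reads an object through it. Boto3's `get_object` accepts an access point ARN in place of a bucket name. The helper names, Region, account ID, access point name, and object key are all hypothetical placeholders, not values from this post.

```python
def build_access_point_arn(region: str, account_id: str, name: str) -> str:
    """Return the ARN of an S3 access point owned by the given account."""
    return f"arn:aws:s3:{region}:{account_id}:accesspoint/{name}"


def read_object(s3_client, access_point_arn: str, key: str) -> bytes:
    """Read one object through an access point.

    The access point ARN is passed where a bucket name would normally go.
    """
    response = s3_client.get_object(Bucket=access_point_arn, Key=key)
    return response["Body"].read()


# Example usage from a notebook (assumes boto3 is installed and the notebook's
# execution role is allowed by the access point policy; all values are
# placeholders):
#
#   import boto3
#   s3 = boto3.client("s3")
#   arn = build_access_point_arn("us-east-1", "111122223333", "shared-datasets-ap")
#   data = read_object(s3, arn, "transactions/sample.csv")
```

Passing the client in as a parameter keeps the helper easy to exercise with a stub, without AWS credentials.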
