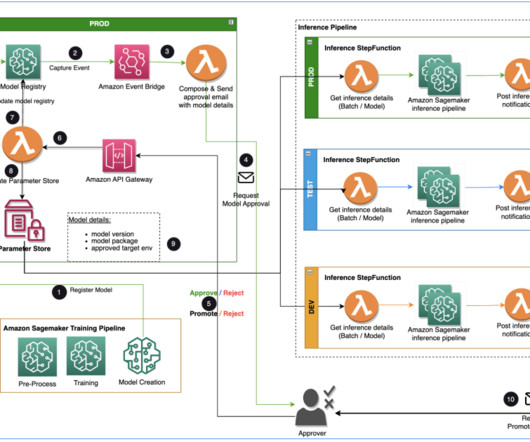

Build an Amazon SageMaker Model Registry approval and promotion workflow with human intervention

AWS Machine Learning

JANUARY 10, 2024

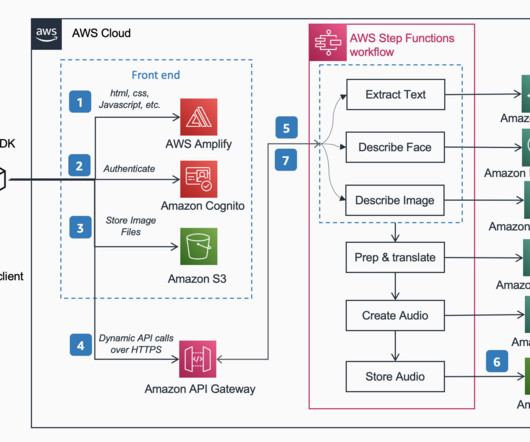

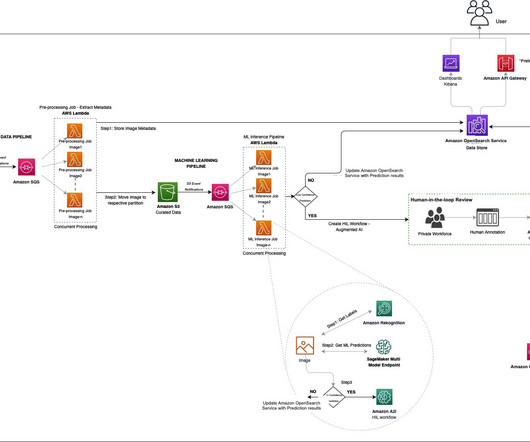

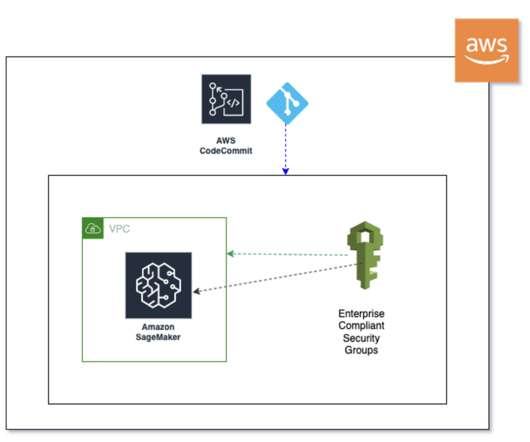

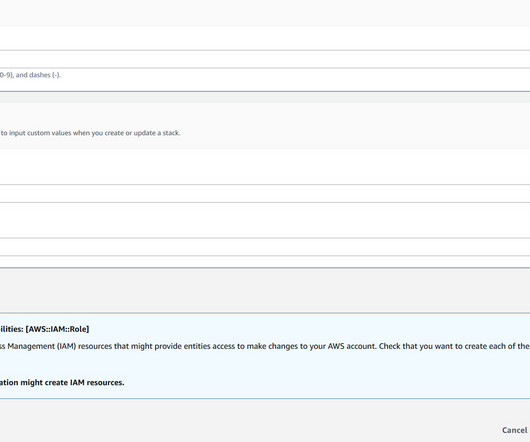

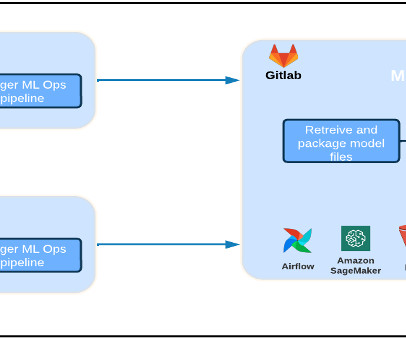

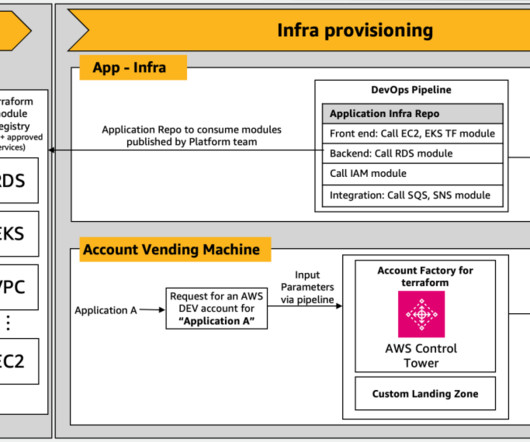

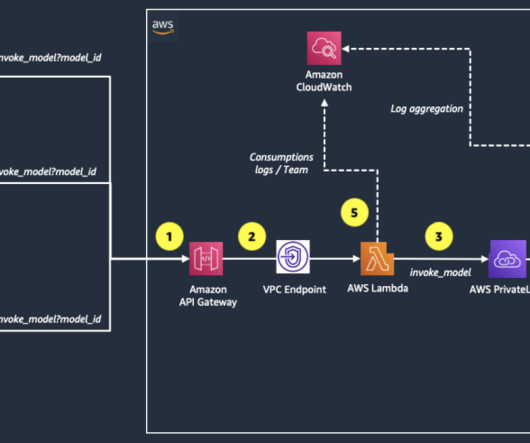

The solution uses AWS Lambda, Amazon API Gateway, Amazon EventBridge, and SageMaker to automate the workflow, with a human approval step in the middle. EventBridge monitors status change events and triggers actions automatically through simple rules. API Gateway invokes a Lambda function to initiate model updates.
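
As a rough illustration of the pieces described above, the sketch below shows what an EventBridge rule pattern and an approval Lambda function might look like. It is not the post's actual implementation: the request body fields `model_package_arn` and `decision`, the `parse_decision` helper, and the optional `sagemaker_client` parameter (added so the handler can be exercised without AWS credentials) are all assumptions made for this example. The only SageMaker call used is boto3's `update_model_package`.

```python
import json

# Event pattern an EventBridge rule could use to react when a model package
# in the SageMaker Model Registry is waiting for a human decision.
# Filtering on PendingManualApproval is an assumption for this sketch.
MODEL_PACKAGE_EVENT_PATTERN = {
    "source": ["aws.sagemaker"],
    "detail-type": ["SageMaker Model Package State Change"],
    "detail": {"ModelApprovalStatus": ["PendingManualApproval"]},
}

VALID_DECISIONS = {"Approved", "Rejected"}


def parse_decision(event):
    """Extract the model package ARN and decision from an API Gateway proxy event.

    The body field names are hypothetical; adapt them to your own API contract.
    """
    body = json.loads(event.get("body") or "{}")
    if not isinstance(body, dict):
        raise ValueError("request body must be a JSON object")
    arn = body.get("model_package_arn")
    decision = body.get("decision")
    if not arn:
        raise ValueError("model_package_arn is required")
    if decision not in VALID_DECISIONS:
        raise ValueError("decision must be 'Approved' or 'Rejected'")
    return arn, decision


def lambda_handler(event, context, sagemaker_client=None):
    """Record the reviewer's decision on the model package."""
    try:
        arn, decision = parse_decision(event)
    except ValueError as err:  # also covers malformed JSON (JSONDecodeError)
        return {"statusCode": 400, "body": json.dumps({"error": str(err)})}

    if sagemaker_client is None:
        import boto3  # imported lazily so the module loads without boto3 in tests

        sagemaker_client = boto3.client("sagemaker")

    # Changing the approval status emits another state change event,
    # which downstream EventBridge rules can use to trigger promotion.
    sagemaker_client.update_model_package(
        ModelPackageArn=arn,
        ModelApprovalStatus=decision,
        ApprovalDescription="Set via approval API",
    )
    return {
        "statusCode": 200,
        "body": json.dumps({"model_package_arn": arn, "status": decision}),
    }
```

In this arrangement the reviewer's approve or reject action reaches the function through API Gateway, and the resulting status change is what EventBridge rules would pick up for the next stage of the workflow.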
