Integrate SaaS platforms with Amazon SageMaker to enable ML-powered applications

AWS Machine Learning

JULY 6, 2023

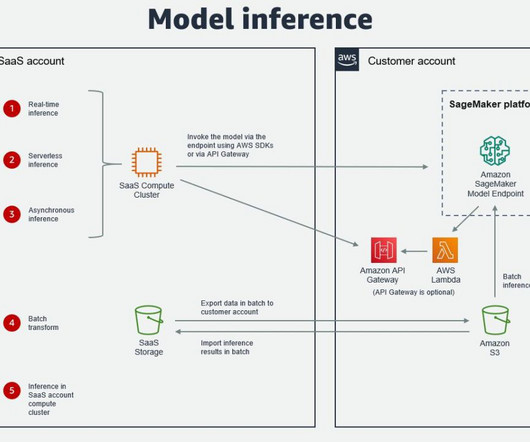

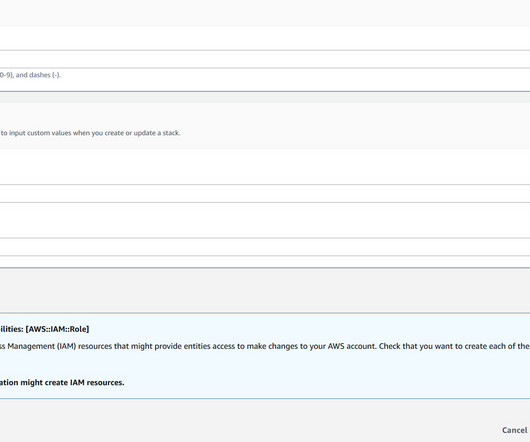

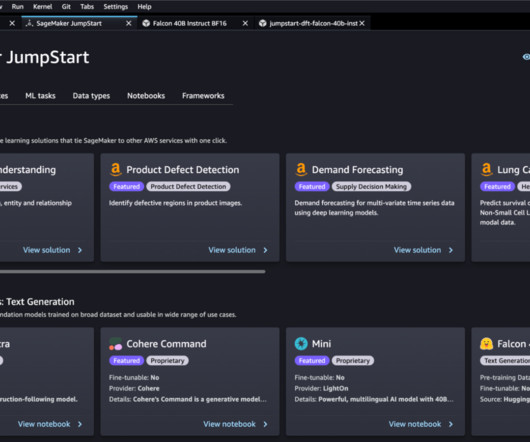

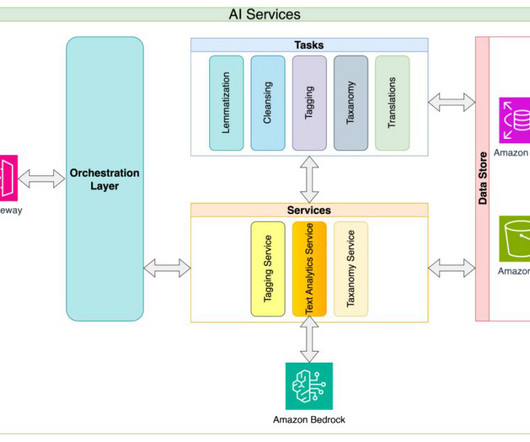

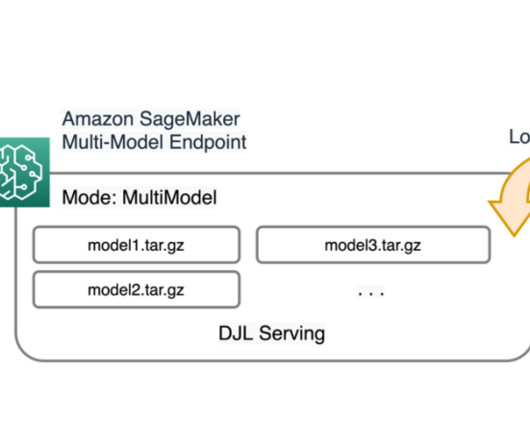

A number of AWS independent software vendor (ISV) partners have already built integrations that let users of their software as a service (SaaS) platforms use Amazon SageMaker and its features, including training, deployment, and the model registry.
