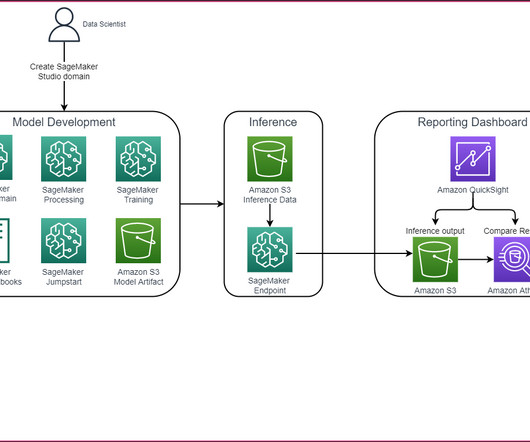

How LotteON built a personalized recommendation system using Amazon SageMaker and MLOps

AWS Machine Learning

MAY 16, 2024

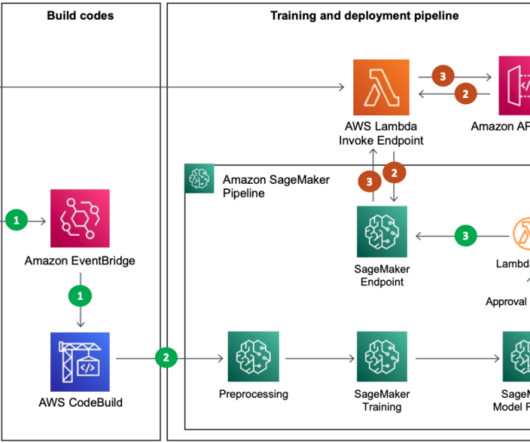

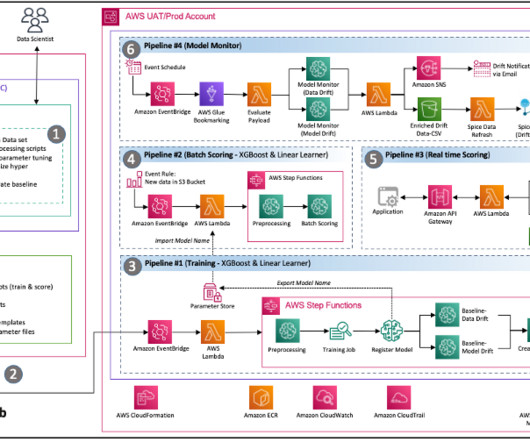

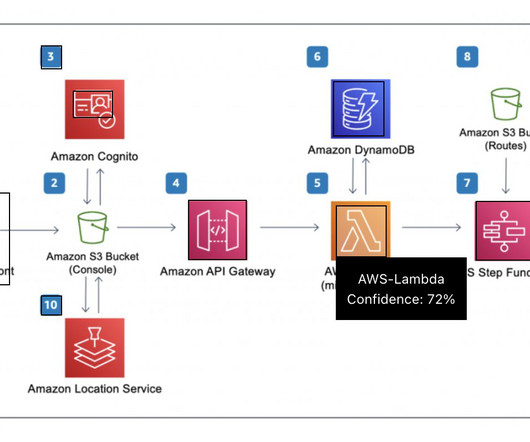

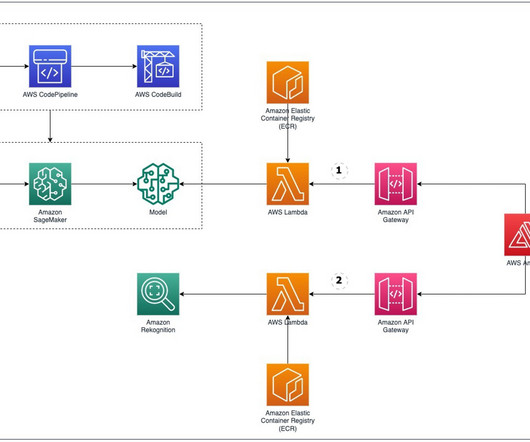

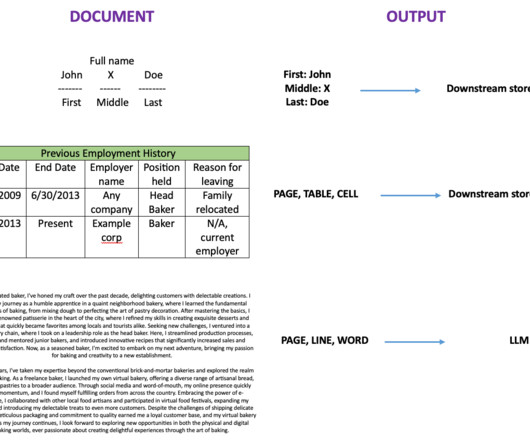

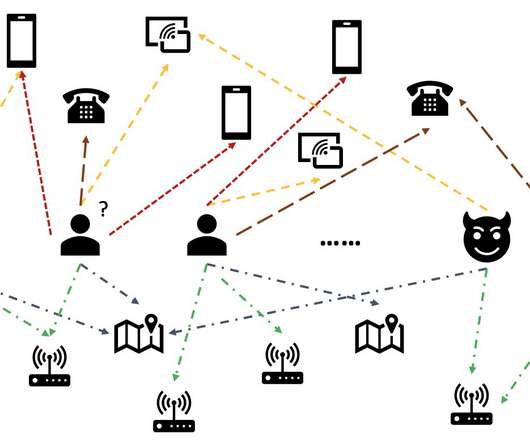

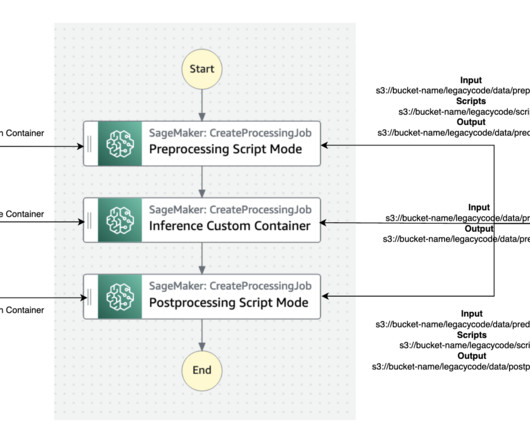

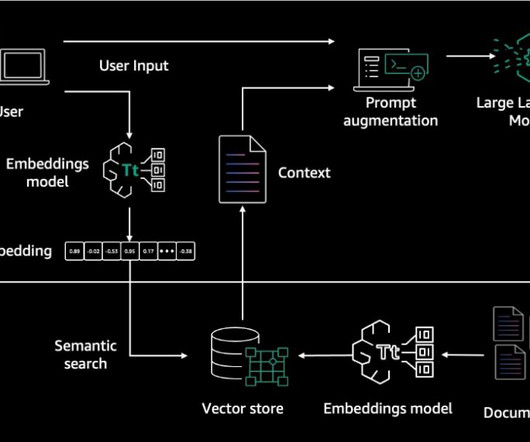

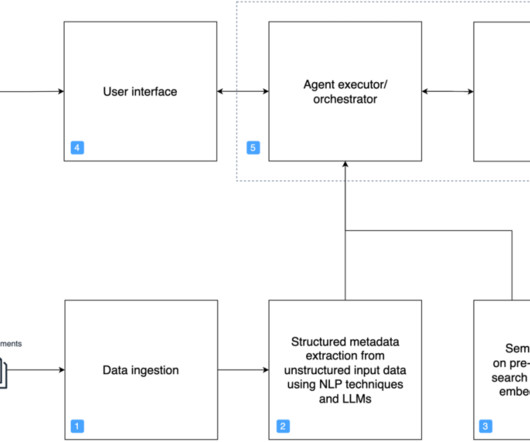

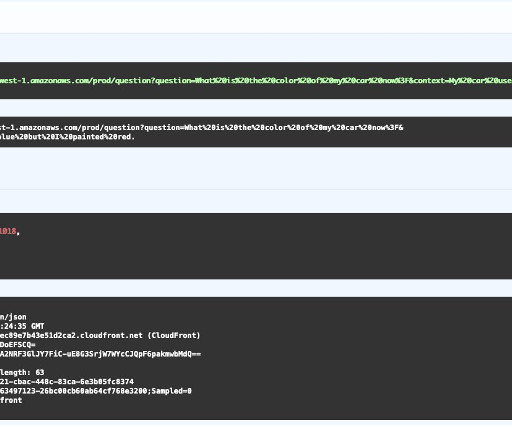

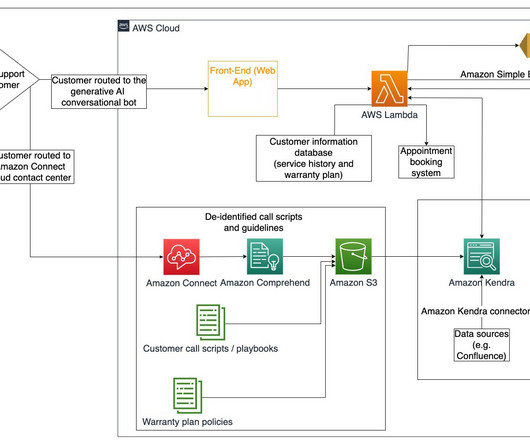

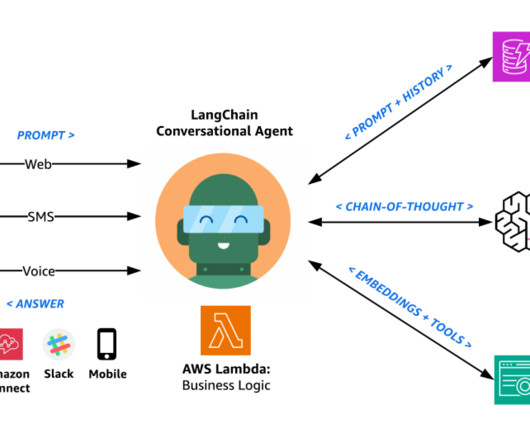

The main AWS services used are Amazon SageMaker, Amazon EMR, AWS CodeBuild, Amazon Simple Storage Service (Amazon S3), Amazon EventBridge, AWS Lambda, and Amazon API Gateway.

Real-time recommendation inference

The inference phase consists of the following steps:

1. The client application makes an inference request to Amazon API Gateway.
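The first inference step, the client calling API Gateway, can be sketched in Python using only the standard library. This is a minimal illustration under stated assumptions, not LotteON's implementation:

- The post does not describe the request format. The invoke URL, the `/recommendations` path, and the `user_id` and `num_items` payload fields are all hypothetical.
- Authentication is left out. A real deployment may require IAM SigV4 signing or an API key.

```python
import json
import urllib.request

# Hypothetical API Gateway stage URL; the real endpoint and path are not given in the post.
API_URL = "https://abc123.execute-api.us-east-1.amazonaws.com/prod/recommendations"


def build_inference_request(user_id: str, num_items: int = 10,
                            url: str = API_URL) -> urllib.request.Request:
    """Build a JSON POST request asking the API for recommendations for one user."""
    # Assumed payload schema, for illustration only.
    payload = json.dumps({"user_id": user_id, "num_items": num_items}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def get_recommendations(user_id: str, num_items: int = 10,
                        url: str = API_URL, timeout: float = 3.0) -> dict:
    """Send the request to API Gateway and decode the JSON response body."""
    req = build_inference_request(user_id, num_items, url)
    # A short timeout suits a real-time path; the value here is arbitrary.
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Calling `get_recommendations("user-123", num_items=5)` would POST the payload and return whatever JSON the backend produces. The request is built in its own function so it can be inspected or tested without network access. The backend behind API Gateway (for example, a Lambda function calling a SageMaker endpoint) is outside this sketch.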

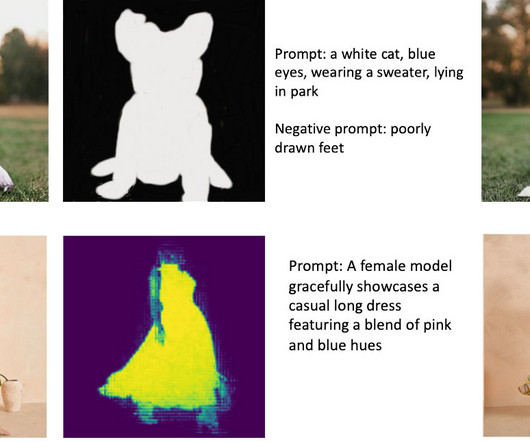