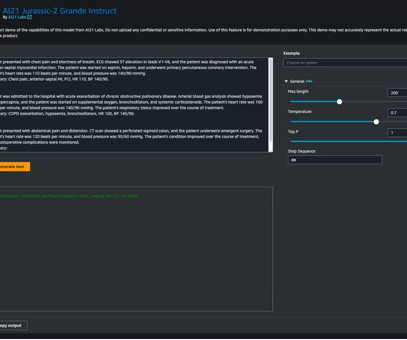

Exploring summarization options for Healthcare with Amazon SageMaker

AWS Machine Learning

AUGUST 1, 2023

In today’s rapidly evolving healthcare landscape, doctors face vast amounts of clinical data from sources such as caregiver notes, electronic health records, and imaging reports. In a healthcare setting, tailoring a summarization model to the domain would mean giving it data that includes phrases and terminology specific to patient care.
