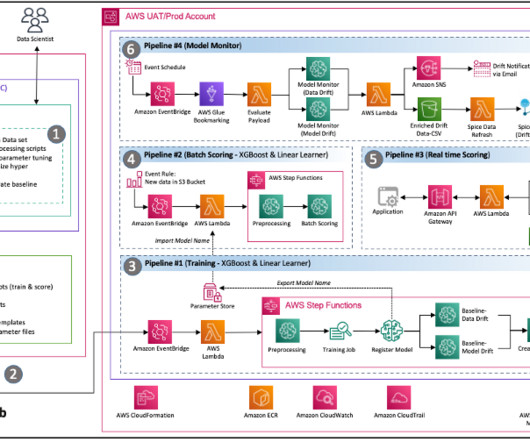

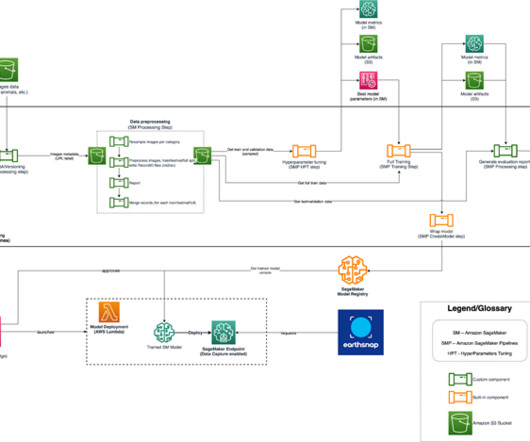

Modernizing data science lifecycle management with AWS and Wipro

AWS Machine Learning

JANUARY 5, 2024

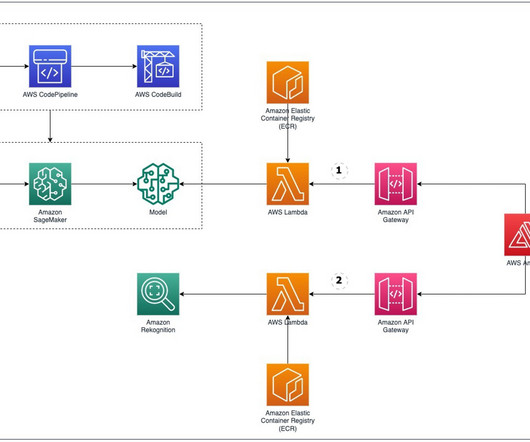

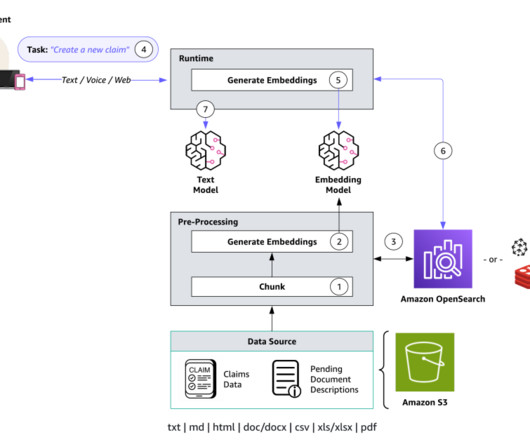

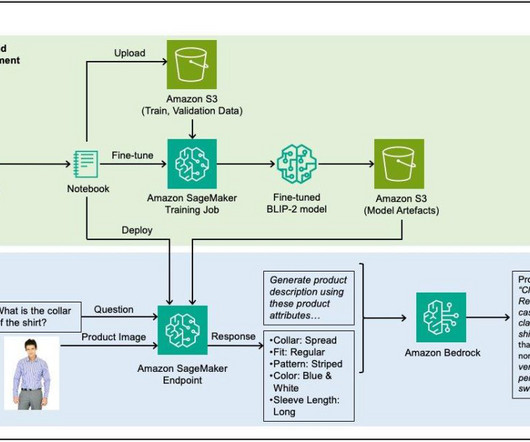

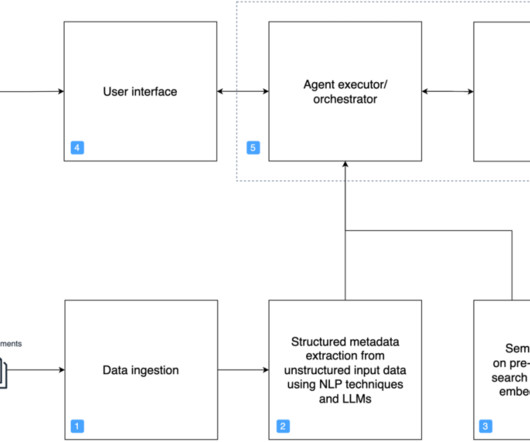

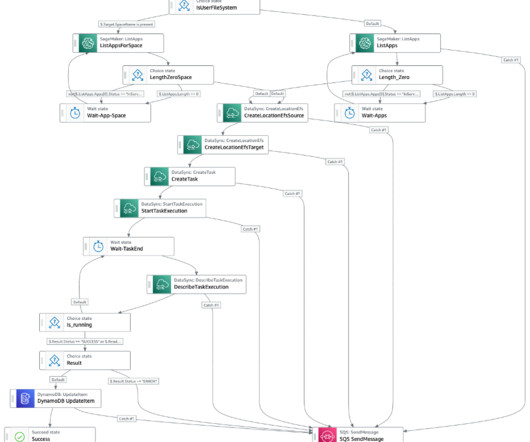

Many organizations have been using a combination of on-premises and open source data science solutions to create and manage machine learning (ML) models. Data science and DevOps teams may face challenges managing these isolated tool stacks and systems. Wipro is an AWS Premier Tier Services Partner and Managed Service Provider (MSP).