Accelerate NLP inference with ONNX Runtime on AWS Graviton processors

AWS Machine Learning

MAY 15, 2024

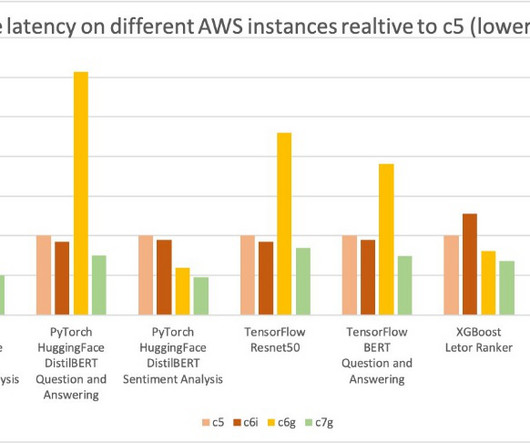

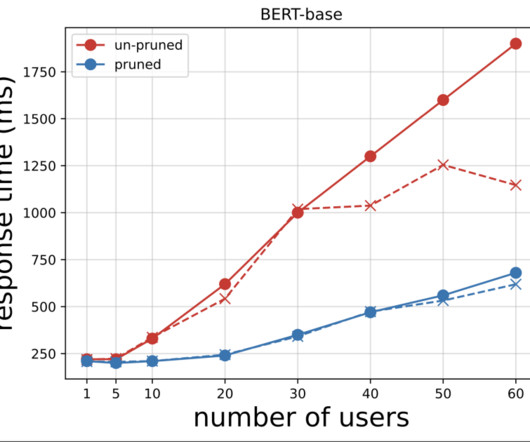

We also demonstrate the resulting speedup through benchmarking.

Benchmark setup

We used an AWS Graviton3-based c7g.4xl instance (… 1014-aws kernel). The ONNX Runtime repo provides inference benchmarking scripts for transformer-based language models. The scripts support a wide range of models, frameworks, and formats.
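The repo's benchmarking scripts handle model export and configuration for you. The core measurement they rely on can be sketched in a few lines: run some warm-up iterations, then time repeated inference calls and summarize the latency distribution. The sketch below is a generic, stdlib-only timing harness and is not the ONNX Runtime scripts' implementation. The `benchmark` function, its parameters, and the reported statistics are hypothetical illustrations. The ONNX Runtime usage in the comments assumes a model already exported to a hypothetical `model.onnx` and an input dictionary `feeds` that matches its inputs.

```python
import math
import statistics
import time
from typing import Callable, Dict


def benchmark(run_once: Callable[[], object], warmup: int = 5, iters: int = 50) -> Dict[str, float]:
    """Time a zero-argument inference callable; report latency stats in milliseconds.

    Hypothetical helper for illustration, not part of ONNX Runtime.
    """
    if iters < 1:
        raise ValueError("iters must be >= 1")

    # Warm-up runs are discarded: early calls can include one-time costs
    # such as memory allocation and cache warm-up.
    for _ in range(warmup):
        run_once()

    latencies_ms = []
    for _ in range(iters):
        start = time.perf_counter()
        run_once()
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    latencies_ms.sort()

    def percentile(p: float) -> float:
        # Nearest-rank percentile over the sorted samples.
        rank = max(1, math.ceil(p / 100.0 * len(latencies_ms)))
        return latencies_ms[rank - 1]

    mean_ms = statistics.fmean(latencies_ms)
    return {
        "mean_ms": mean_ms,
        "p50_ms": percentile(50),
        "p90_ms": percentile(90),
        "p99_ms": percentile(99),
        # Sequential (batch-of-one) throughput implied by the mean latency.
        "throughput_per_s": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
    }


if __name__ == "__main__":
    # Stand-in CPU workload so the sketch runs without extra packages.
    stats = benchmark(lambda: sum(i * i for i in range(100_000)), warmup=3, iters=20)
    for name, value in stats.items():
        print(f"{name}: {value:.3f}")

    # With ONNX Runtime installed, the callable would wrap a session run instead
    # (assumption: "model.onnx" exists and `feeds` maps input names to arrays):
    #   import onnxruntime as ort
    #   session = ort.InferenceSession("model.onnx", providers=["CPUExecutionProvider"])
    #   stats = benchmark(lambda: session.run(None, feeds))
```

Reporting percentiles alongside the mean makes tail latency visible, which a single average hides. Comparing these numbers before and after a change, such as a different ONNX Runtime build or session configuration on the same Graviton instance, is the kind of speedup comparison the benchmark scripts automate.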
