Speed ML development using SageMaker Feature Store and Apache Iceberg offline store compaction

AWS Machine Learning

DECEMBER 21, 2022

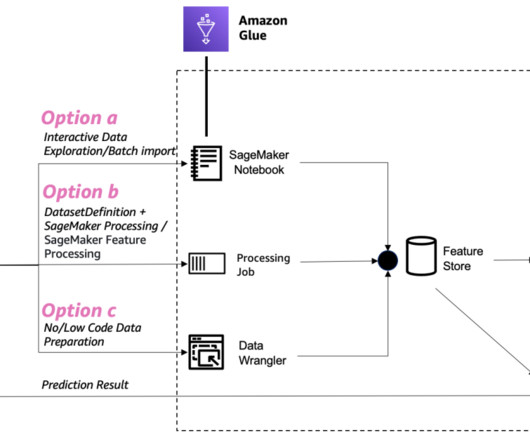

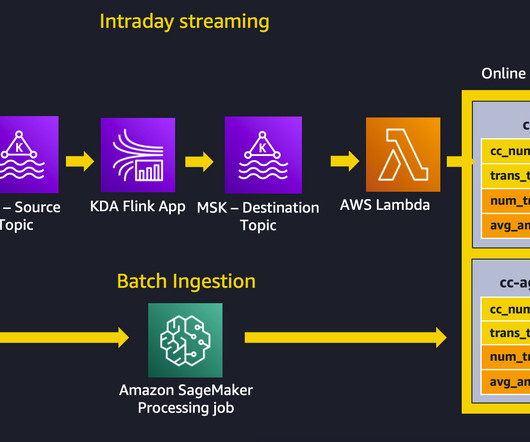

The offline store data is stored in an Amazon Simple Storage Service (Amazon S3) bucket in your AWS account. A new optional parameter, `TableFormat`, lets you choose Apache Iceberg as the table format of the offline store. You can set it either interactively using Amazon SageMaker Studio or through code using the API or the SDK. You can use the `put_record` API to ingest individual records or to handle streaming sources.
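As an illustration of the API path, the sketch below builds the keyword arguments for the boto3 `create_feature_group` call (with `TableFormat` set to `"Iceberg"` inside `OfflineStoreConfig`) and for a single `put_record` call. It is a minimal sketch, not the post's own code. It only constructs request dictionaries and makes no AWS calls. The feature group name, feature names (`customer_id`, `event_time`, `total_spend`), S3 URI, and role ARN are hypothetical placeholders.

```python
"""Sketch: request payloads for an Iceberg-backed offline store and put_record.

Assumption: all feature names, the S3 URI, and the role ARN passed in are
hypothetical placeholders chosen for illustration.
"""
from typing import Any


def build_create_feature_group_request(
    feature_group_name: str, s3_uri: str, role_arn: str
) -> dict[str, Any]:
    """Return kwargs for the boto3 SageMaker client's create_feature_group."""
    return {
        "FeatureGroupName": feature_group_name,
        "RecordIdentifierFeatureName": "customer_id",  # hypothetical feature
        "EventTimeFeatureName": "event_time",          # hypothetical feature
        "FeatureDefinitions": [
            {"FeatureName": "customer_id", "FeatureType": "String"},
            {"FeatureName": "event_time", "FeatureType": "String"},
            {"FeatureName": "total_spend", "FeatureType": "Fractional"},
        ],
        "OnlineStoreConfig": {"EnableOnlineStore": True},
        "OfflineStoreConfig": {
            # Offline store data lands in an S3 bucket in your account.
            "S3StorageConfig": {"S3Uri": s3_uri},
            # Optional parameter: "Iceberg" instead of the default "Glue".
            "TableFormat": "Iceberg",
        },
        "RoleArn": role_arn,
    }


def build_put_record_request(
    feature_group_name: str, record: dict[str, Any]
) -> dict[str, Any]:
    """Return kwargs for the sagemaker-featurestore-runtime put_record call."""
    # put_record takes every feature value as a string in ValueAsString.
    return {
        "FeatureGroupName": feature_group_name,
        "Record": [
            {"FeatureName": name, "ValueAsString": str(value)}
            for name, value in record.items()
        ],
    }
```

To send the requests, you would pass them to real clients, for example `boto3.client("sagemaker").create_feature_group(**request)` and, once the feature group has finished creating, `boto3.client("sagemaker-featurestore-runtime").put_record(**put_request)`. Keeping payload construction separate from the clients makes the configuration easy to inspect and test without AWS credentials.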
