Modernizing data science lifecycle management with AWS and Wipro

AWS Machine Learning

JANUARY 5, 2024

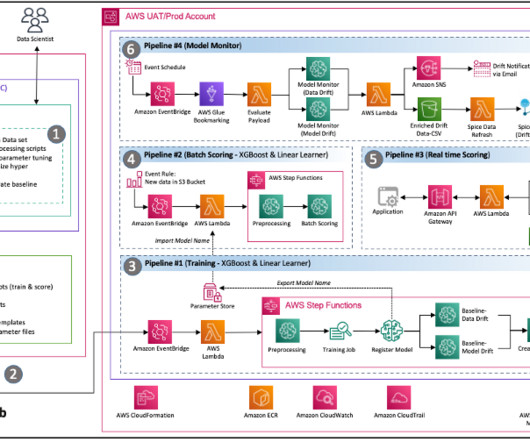

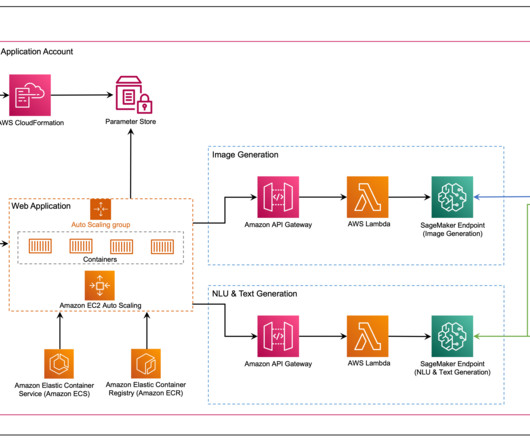

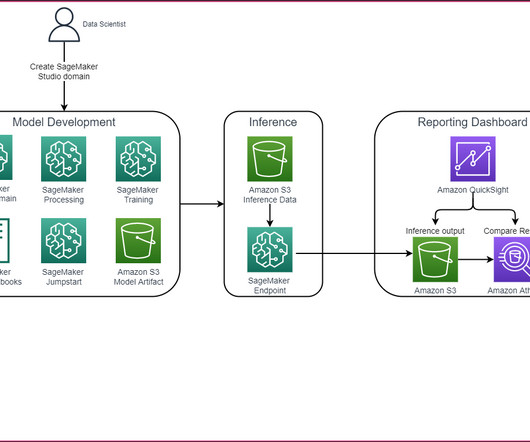

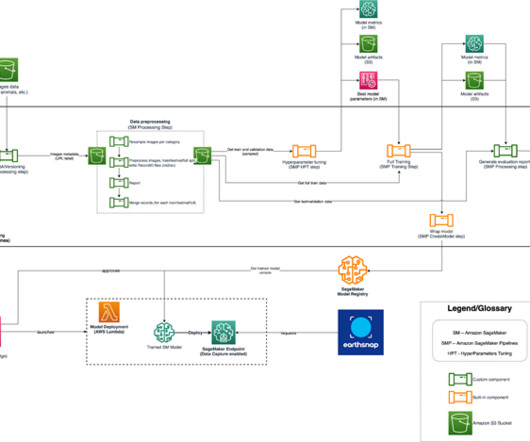

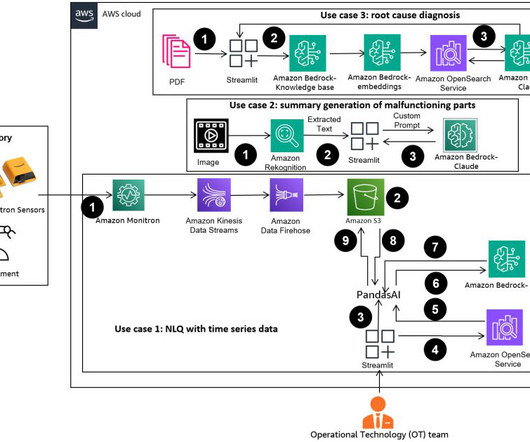

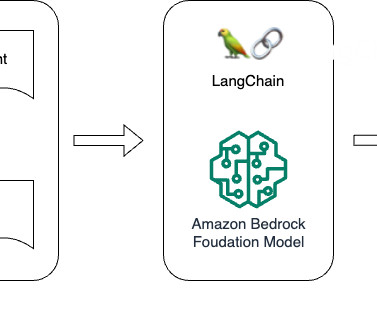

By the end of the consulting engagement, the team had implemented the following architecture, which addressed the customer team's core requirements, including:

- Code sharing: SageMaker notebooks enable data scientists to experiment and share code with other team members.
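The post does not say how code was shared between notebooks. The sketch below is one possible approach. It assumes the team attached a single shared Git repository to every SageMaker notebook instance. `NotebookInstanceName`, `InstanceType`, `RoleArn`, and `DefaultCodeRepository` are real `CreateNotebookInstance` parameters. The helper functions, the `ds-<member>` naming scheme, the role ARN, and the repository URL are hypothetical placeholders.

```python
from typing import Any, Dict, List, Protocol, Sequence


class NotebookClient(Protocol):
    """The one method this sketch needs; a boto3 SageMaker client provides it."""

    def create_notebook_instance(self, **kwargs: Any) -> Dict[str, Any]: ...


def notebook_instance_request(
    instance_name: str,
    role_arn: str,
    shared_repo_url: str,
    instance_type: str = "ml.t3.medium",
) -> Dict[str, Any]:
    """Build keyword arguments for CreateNotebookInstance."""
    return {
        "NotebookInstanceName": instance_name,
        "InstanceType": instance_type,
        "RoleArn": role_arn,
        # Either a Git URL or the name of a repository registered in SageMaker;
        # the instance clones it and opens in that directory.
        "DefaultCodeRepository": shared_repo_url,
    }


def create_team_instances(
    client: NotebookClient,
    members: Sequence[str],
    role_arn: str,
    shared_repo_url: str,
) -> List[str]:
    """Create one notebook instance per member, all attached to the same repo."""
    names: List[str] = []
    for member in members:
        # Hypothetical naming scheme: "ds-<member>".
        request = notebook_instance_request(f"ds-{member}", role_arn, shared_repo_url)
        client.create_notebook_instance(**request)
        names.append(request["NotebookInstanceName"])
    return names
```

With AWS credentials configured, you could pass `boto3.client("sagemaker")` as `client` to issue the real calls. Taking the client as an argument keeps the sketch testable without an AWS account. For a private repository, you would typically register it first with `create_code_repository` and then reference it by name.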
