Build well-architected IDP solutions with a custom lens – Part 4: Performance efficiency

AWS Machine Learning

NOVEMBER 22, 2023

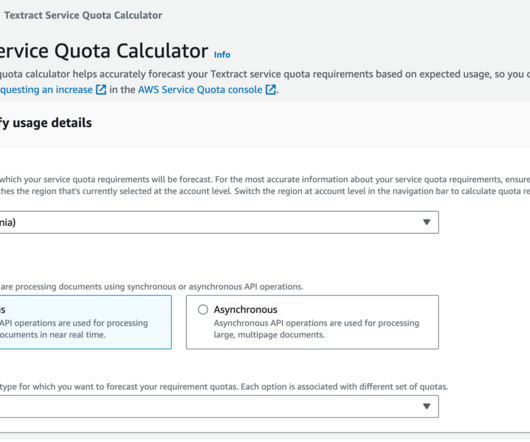

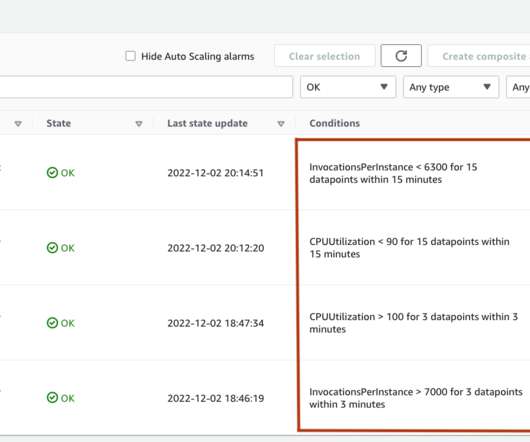

The AWS Well-Architected Framework provides a systematic way for organizations to learn operational and architectural best practices for designing and operating reliable, secure, efficient, cost-effective, and sustainable workloads in the cloud. Following these practices helps you avoid throttling limits on API calls caused by polling the Get* APIs.
