Knowledge Bases for Amazon Bedrock now supports hybrid search

AWS Machine Learning

MARCH 1, 2024

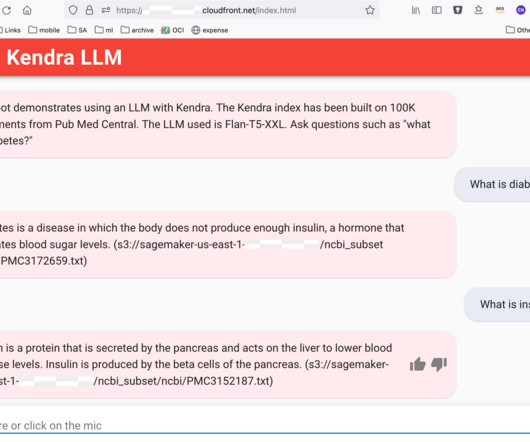

For example, if you want to build a chatbot for an ecommerce website that handles customer queries such as the return policy or details of a product, hybrid search will be the most suitable option.

Contextual-based chatbots – Conversations can rapidly change direction and cover unpredictable topics.
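To make the ecommerce chatbot example more concrete, here is a minimal sketch of how a query might request hybrid search through the `Retrieve` API using boto3. It is an illustration under assumptions, not the post's own code: the helper names `build_retrieve_request` and `retrieve_passages` are hypothetical, the knowledge base ID is a placeholder, and the call assumes boto3 is installed with AWS credentials and a Region configured.

```python
from typing import Any, Dict, List


def build_retrieve_request(kb_id: str, query: str, top_k: int = 5,
                           search_type: str = "HYBRID") -> Dict[str, Any]:
    """Build keyword arguments for a Retrieve call (hypothetical helper).

    search_type is passed as overrideSearchType; "HYBRID" combines semantic
    and keyword matching, "SEMANTIC" uses vector similarity only.
    """
    if search_type not in ("HYBRID", "SEMANTIC"):
        raise ValueError(f"unsupported search type: {search_type}")
    return {
        "knowledgeBaseId": kb_id,
        "retrievalQuery": {"text": query},
        "retrievalConfiguration": {
            "vectorSearchConfiguration": {
                "numberOfResults": top_k,
                "overrideSearchType": search_type,
            }
        },
    }


def retrieve_passages(kb_id: str, query: str) -> List[str]:
    """Send the request and return the text of each retrieved chunk."""
    import boto3  # imported lazily so the request builder works without it

    client = boto3.client("bedrock-agent-runtime")
    response = client.retrieve(**build_retrieve_request(kb_id, query))
    return [result["content"]["text"] for result in response["retrievalResults"]]
```

A chatbot backend could call `retrieve_passages("YOUR_KB_ID", "What is the return policy?")` and pass the returned passages to a model as context. Keeping the request builder separate from the network call makes it easy to switch between `HYBRID` and `SEMANTIC` and compare results for the same query.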
