Build a cross-account MLOps workflow using the Amazon SageMaker model registry

AWS Machine Learning

NOVEMBER 16, 2022

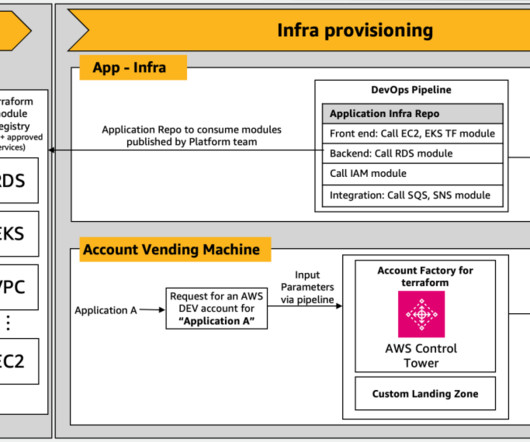

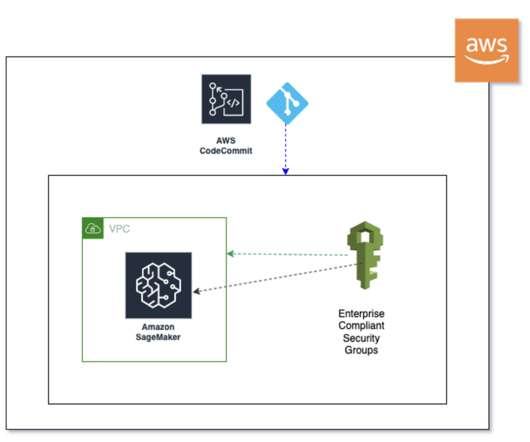

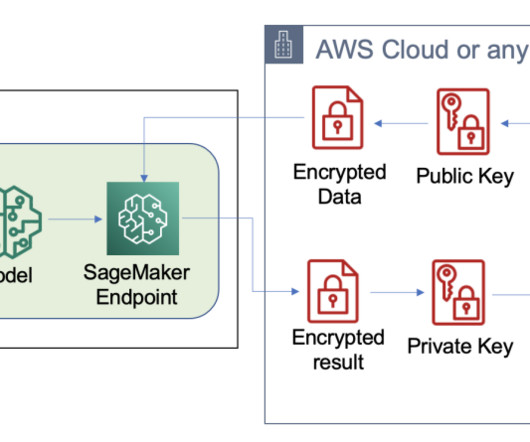

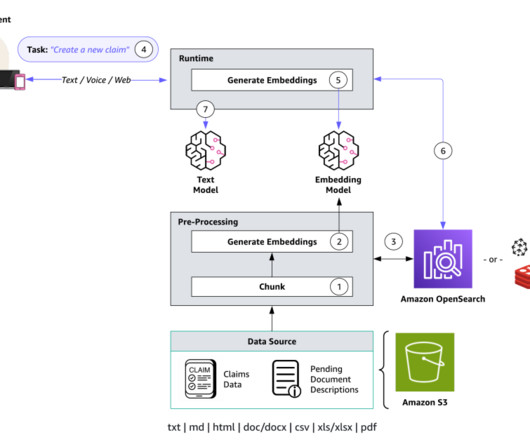

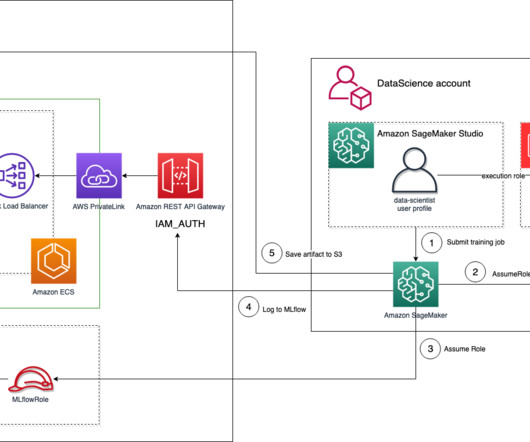

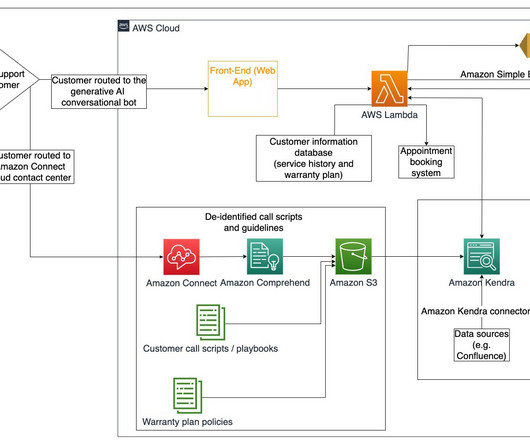

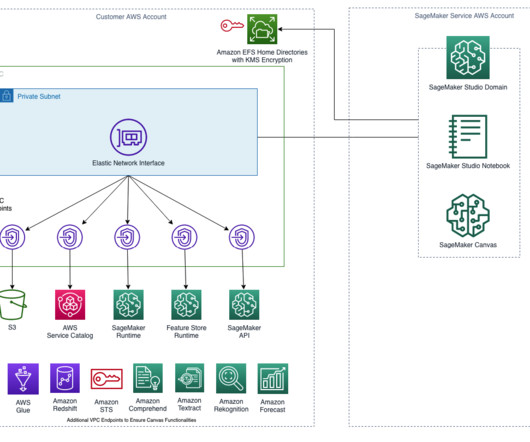

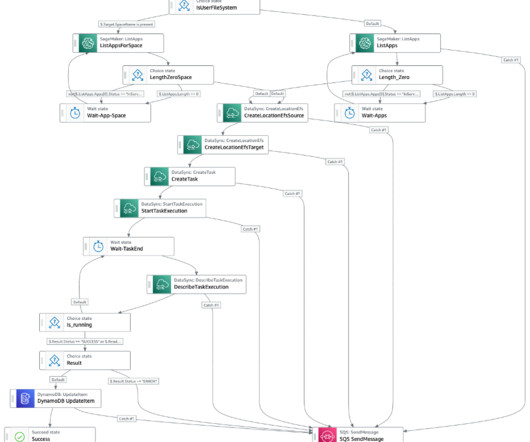

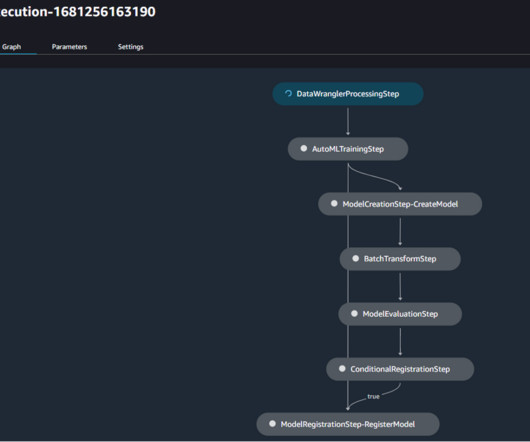

When designing production CI/CD pipelines, AWS recommends using multiple accounts to isolate resources, contain security threats, and simplify billing, and data science pipelines are no different. Some things to note in the preceding architecture: accounts follow the principle of least privilege, in line with security best practices.
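To make the least-privilege idea concrete, here is a minimal sketch of a resource policy that lets a single consumer account read models from a model package group owned by another account. The account IDs, Region, and group name are placeholder assumptions, and the choice of read-only actions is illustrative rather than the policy used in the original post.

```python
import json


def build_registry_policy(registry_account_id, consumer_account_id, region, group_name):
    """Build a read-only resource policy for one model package group.

    All arguments are hypothetical values supplied by the caller; nothing
    here is taken from the original architecture.
    """
    group_arn = (
        f"arn:aws:sagemaker:{region}:{registry_account_id}"
        f":model-package-group/{group_name}"
    )
    # Model package versions live under the group name, so scope to them only.
    package_arn = (
        f"arn:aws:sagemaker:{region}:{registry_account_id}"
        f":model-package/{group_name}/*"
    )
    principal = {"AWS": f"arn:aws:iam::{consumer_account_id}:root"}

    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadModelPackageGroup",
                "Effect": "Allow",
                "Principal": principal,
                "Action": ["sagemaker:DescribeModelPackageGroup"],
                "Resource": group_arn,
            },
            {
                "Sid": "ReadModelPackages",
                "Effect": "Allow",
                "Principal": principal,
                # Read-only actions: the consumer cannot register or approve models.
                "Action": [
                    "sagemaker:DescribeModelPackage",
                    "sagemaker:ListModelPackages",
                ],
                "Resource": package_arn,
            },
        ],
    }


# Placeholder account IDs and names, for illustration only.
policy = build_registry_policy(
    "111111111111", "222222222222", "us-east-1", "example-models"
)
print(json.dumps(policy, indent=2))
```

A policy document like this could then be attached to the group, for example with the boto3 SageMaker client's `put_model_package_group_policy` call, which takes the policy as a JSON string. Granting only the named read actions on a single group, rather than `sagemaker:*` on all resources, is what keeps the consumer account within least privilege.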
