Build a multilingual automatic translation pipeline with Amazon Translate Active Custom Translation

AWS Machine Learning

JUNE 15, 2023

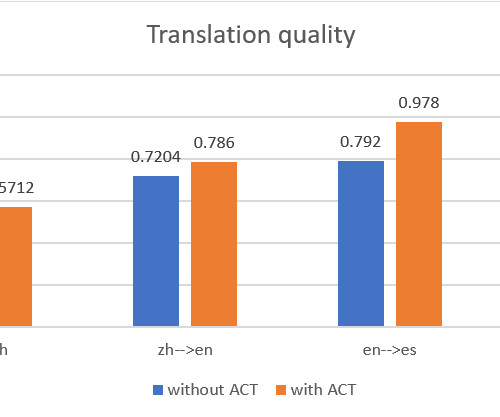

We demonstrate how to use the AWS Management Console and Amazon Translate public API to deliver automatic machine batch translation, and analyze the translations between two language pairs: English and Chinese, and English and Spanish. In this post, we present a solution that D2L.ai

Let's personalize your content