How Amp on Amazon used data to increase customer engagement, Part 1: Building a data analytics platform

AWS Machine Learning

SEPTEMBER 9, 2022

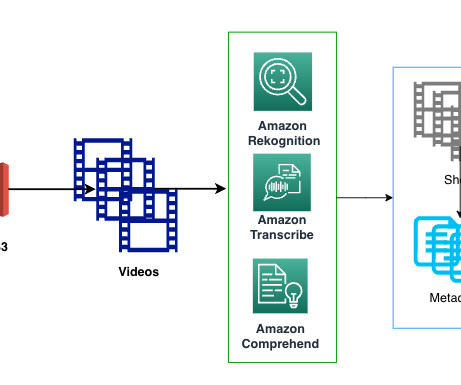

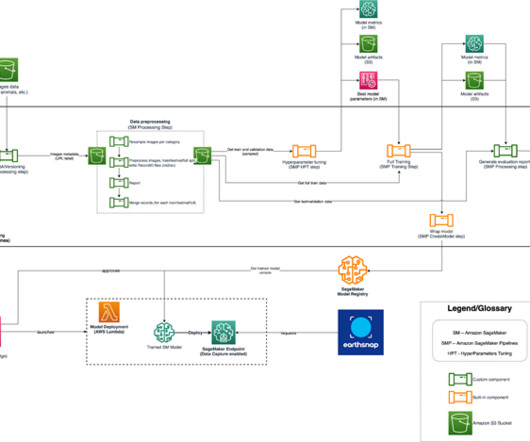

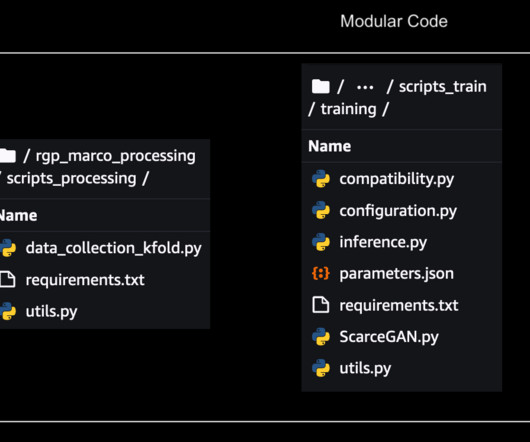

Amp wanted a scalable data and analytics platform that would give teams easy access to data, support machine learning (ML) experiments for live audio transcription, content moderation, feature engineering, and a personal show recommendation service, and let them inspect and measure business KPIs and metrics for business intelligence (BI) and analytics.
