Secure Amazon SageMaker Studio presigned URLs Part 3: Multi-account private API access to Studio

AWS Machine Learning

APRIL 11, 2023

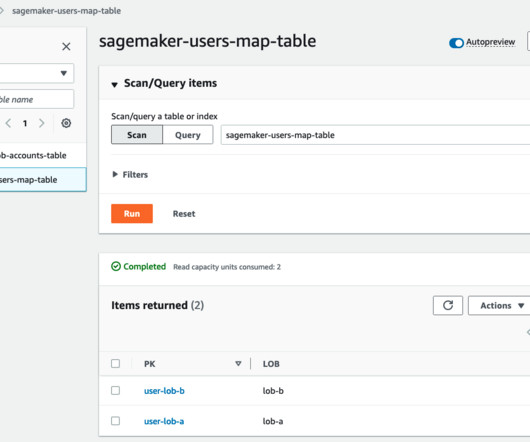

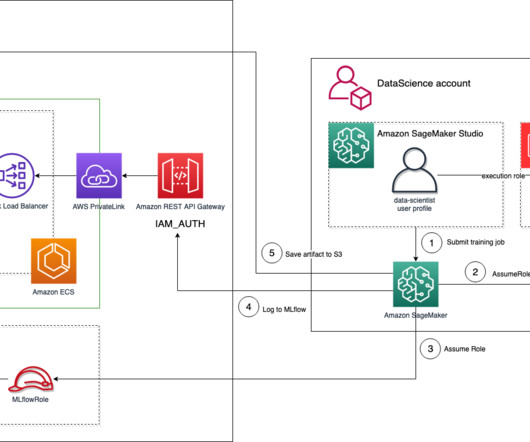

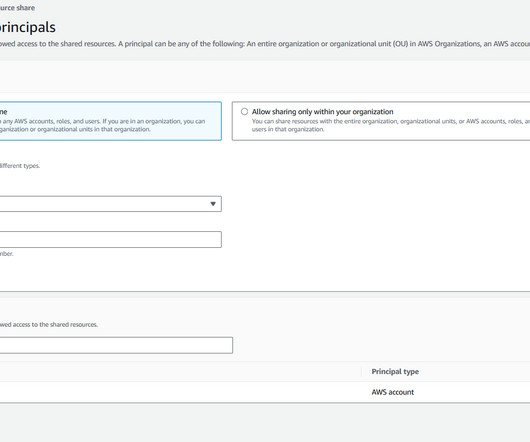

One important aspect of this foundation is organizing the AWS environment around a multi-account strategy. In this post, we show how you can extend that architecture to multiple accounts to support multiple lines of business (LOBs).
