Amazon SageMaker Feature Store now supports cross-account sharing, discovery, and access

AWS Machine Learning

FEBRUARY 13, 2024

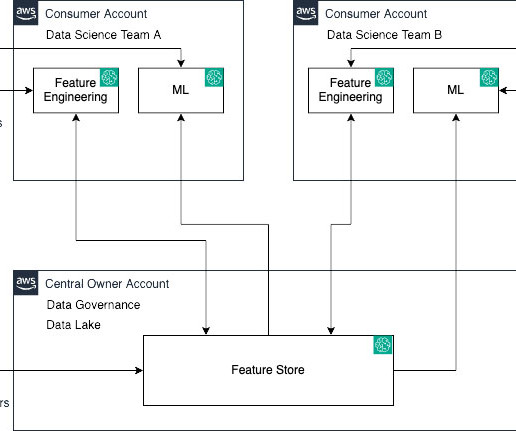

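Features are the attributes a machine learning (ML) model uses as inputs during training and inference. For example, in an application that recommends a music playlist, features could include song ratings, listening duration, and listener demographics. SageMaker Feature Store now supports granular sharing of features across AWS accounts through AWS Resource Access Manager (AWS RAM). Teams in other accounts can discover and use shared features, and the owning account keeps control over who has access.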
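The following is a minimal sketch of how the owning account might share a single feature group. It uses the AWS SDK for Python (boto3) and the AWS RAM `CreateResourceShare` API. The region, account IDs, the feature group name `music-listener-features`, and the helper functions are hypothetical placeholders. The sketch also assumes that the consumer accounts either accept the share or belong to the same AWS Organization. No specific permission is passed, so RAM attaches its default managed permission for the resource type.

```python
"""Sketch: share a SageMaker feature group with other AWS accounts via AWS RAM.

Hypothetical placeholders: region, account IDs, feature group name, share name,
and the helper function names below. Not the announcement's own code.
"""

from typing import Any, Dict, List


def feature_group_arn(region: str, account_id: str, feature_group_name: str) -> str:
    # ARN layout for a SageMaker feature group resource.
    return f"arn:aws:sagemaker:{region}:{account_id}:feature-group/{feature_group_name}"


def build_share_request(
    share_name: str,
    resource_arns: List[str],
    consumer_account_ids: List[str],
    allow_external_principals: bool = True,
) -> Dict[str, Any]:
    # Keyword arguments for boto3's RAM client create_resource_share().
    # permissionArns is omitted, so RAM applies the default managed permission
    # for the resource type (an assumption about the desired access level).
    if not consumer_account_ids:
        raise ValueError("at least one consumer account is required")
    return {
        "name": share_name,
        "resourceArns": list(resource_arns),
        "principals": list(consumer_account_ids),
        # False restricts sharing to accounts inside your AWS Organization.
        "allowExternalPrincipals": allow_external_principals,
    }


def share_feature_group(request: Dict[str, Any]) -> Dict[str, Any]:
    # Imported lazily so the request builder works without boto3 installed.
    import boto3

    ram = boto3.client("ram")
    return ram.create_resource_share(**request)


if __name__ == "__main__":
    arn = feature_group_arn("us-east-1", "111122223333", "music-listener-features")
    request = build_share_request(
        share_name="music-features-share",
        resource_arns=[arn],
        consumer_account_ids=["444455556666"],
    )
    print(request)
    # share_feature_group(request)  # calls AWS RAM; requires real credentials
```

Building the request separately from the API call means you can check the ARN and principal logic without AWS credentials. Only `share_feature_group` makes a network call.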