Accelerate Amazon SageMaker inference with C6i Intel-based Amazon EC2 instances

AWS Machine Learning

MARCH 20, 2023

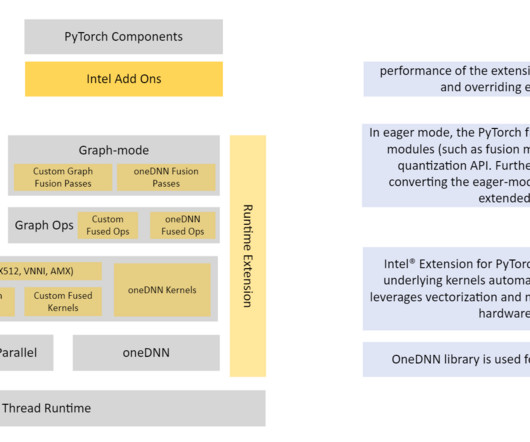

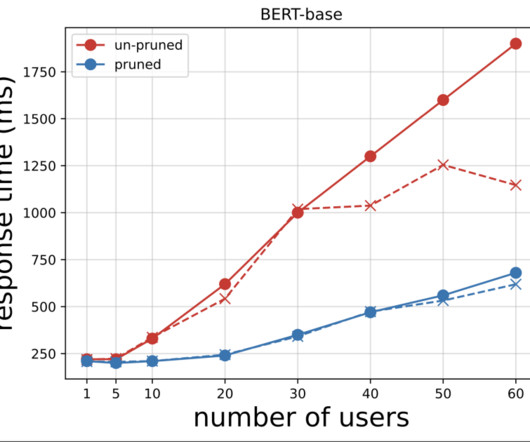

Quantizing the model in PyTorch takes only a few API calls from the Intel Extension for PyTorch. Use the supplied Python scripts to quantize the model, then run the provided Python test scripts to invoke the SageMaker endpoint for both the INT8 and FP32 versions. Refer to the appendix for instance details and benchmark data.
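As a rough illustration of what such a test script might look like, the sketch below times repeated invocations of two endpoints and reports the mean latency of each. It is not the script supplied with this post. The endpoint names, the JSON payload shape, and the helper names `mean_latency_ms`, `compare_endpoints`, and `make_runtime_client` are all assumptions made for the example. The only AWS call used is `invoke_endpoint` on the boto3 `sagemaker-runtime` client.

```python
import json
import time


def mean_latency_ms(client, endpoint_name, payload, n_requests=10):
    """Invoke one endpoint n_requests times and return mean latency in ms.

    `client` is expected to behave like boto3.client("sagemaker-runtime").
    The JSON content type is an assumption about how the model was deployed.
    """
    body = json.dumps(payload)
    latencies = []
    for _ in range(n_requests):
        start = time.perf_counter()
        response = client.invoke_endpoint(
            EndpointName=endpoint_name,
            ContentType="application/json",
            Body=body,
        )
        response["Body"].read()  # drain the stream so the full response is timed
        latencies.append((time.perf_counter() - start) * 1000.0)
    return sum(latencies) / len(latencies)


def compare_endpoints(client, endpoints, payload, n_requests=10):
    """Return {label: mean latency in ms} for each labelled endpoint."""
    return {
        label: mean_latency_ms(client, name, payload, n_requests)
        for label, name in endpoints.items()
    }


def make_runtime_client():
    """Create a real SageMaker runtime client (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helpers above work without boto3

    return boto3.client("sagemaker-runtime")
```

With real endpoints deployed, a call such as `compare_endpoints(make_runtime_client(), {"fp32": "my-fp32-endpoint", "int8": "my-int8-endpoint"}, {"inputs": "sample text"})` would return one mean latency per variant; both endpoint names here are placeholders. Passing the client in as an argument keeps the timing logic testable without AWS access.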
