Build a multilingual automatic translation pipeline with Amazon Translate Active Custom Translation

AWS Machine Learning

JUNE 15, 2023

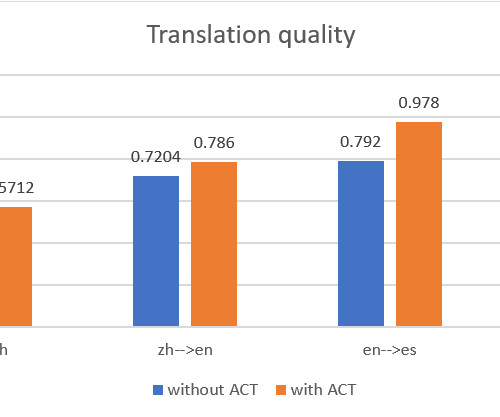

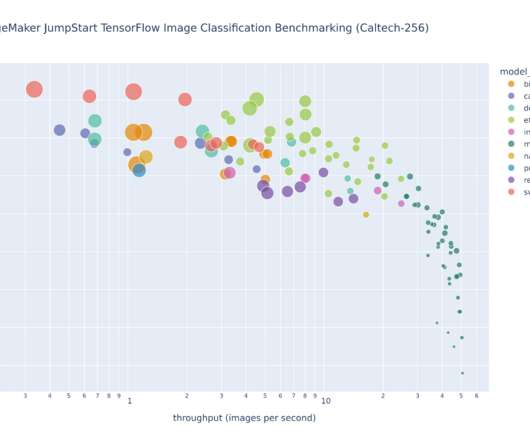

It features interactive Jupyter notebooks with self-contained code in PyTorch, JAX, TensorFlow, and MXNet, as well as real-world examples, exposition figures, and math. ACT allows you to customize translation output on the fly by providing tailored translation examples in the form of parallel data.

Let's personalize your content