Modernizing data science lifecycle management with AWS and Wipro

AWS Machine Learning

JANUARY 5, 2024

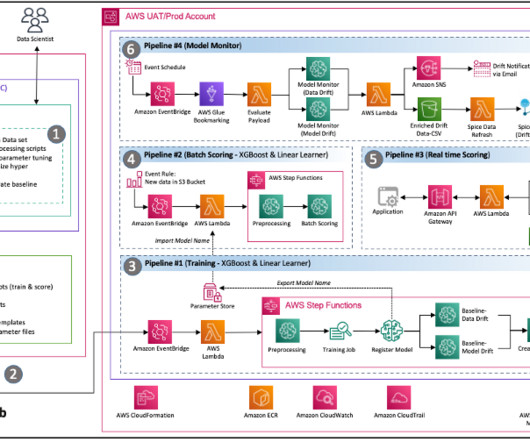

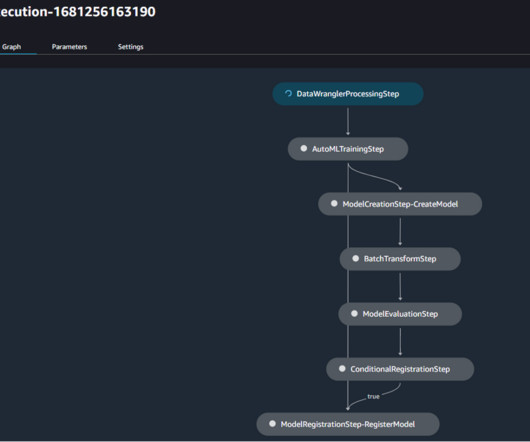

Continuous integration and continuous delivery (CI/CD) pipeline – The customer's GitHub repository provided code versioning, and automated scripts launched a pipeline deployment whenever a new version of the code was committed. Wipro also used the input filter and join functionality of the SageMaker batch transform API.
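As a rough illustration of what input filtering and joining look like in a batch transform request, here is a minimal sketch, not Wipro's actual configuration. It assumes CSV input whose first column is a record ID that the model should not see. The job name, model name, S3 paths, instance type, and the helper names `build_transform_job_request` and `submit_transform_job` are all hypothetical. The `DataProcessing` fields (`InputFilter`, `JoinSource`, `OutputFilter`) belong to the SageMaker `CreateTransformJob` API and are called here through boto3.

```python
"""Sketch: a SageMaker batch transform request that filters inputs and joins predictions."""
from typing import Any, Dict


def build_transform_job_request(
    job_name: str,
    model_name: str,
    input_s3_uri: str,
    output_s3_uri: str,
    instance_type: str = "ml.m5.xlarge",  # hypothetical default
) -> Dict[str, Any]:
    """Return keyword arguments for boto3's SageMaker create_transform_job call."""
    return {
        "TransformJobName": job_name,
        "ModelName": model_name,
        "TransformInput": {
            "DataSource": {
                "S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": input_s3_uri}
            },
            "ContentType": "text/csv",
            # Split each CSV file so that every line is treated as one record.
            "SplitType": "Line",
        },
        "TransformOutput": {
            "S3OutputPath": output_s3_uri,
            "Accept": "text/csv",
            "AssembleWith": "Line",
        },
        "TransformResources": {"InstanceType": instance_type, "InstanceCount": 1},
        "DataProcessing": {
            # Assumption: column 0 is a record ID, so drop it before inference.
            "InputFilter": "$[1:]",
            # Append each prediction to its original (unfiltered) input record.
            "JoinSource": "Input",
            # Keep only the ID column and the appended prediction in the output.
            "OutputFilter": "$[0,-1]",
        },
    }


def submit_transform_job(request: Dict[str, Any]) -> str:
    """Submit the job (requires AWS credentials) and return the transform job ARN."""
    import boto3  # imported here so the request builder works without boto3 installed

    client = boto3.client("sagemaker")
    response = client.create_transform_job(**request)
    return response["TransformJobArn"]
```

For example, `submit_transform_job(build_transform_job_request("example-job", "example-model", "s3://example-bucket/input/", "s3://example-bucket/output/"))` would start a job whose output rows contain only the record ID and its prediction. Keeping the request builder separate from the boto3 call makes the configuration easy to check in a CI/CD pipeline before anything is submitted.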
