Philips accelerates development of AI-enabled healthcare solutions with an MLOps platform built on Amazon SageMaker

AWS Machine Learning

NOVEMBER 16, 2023

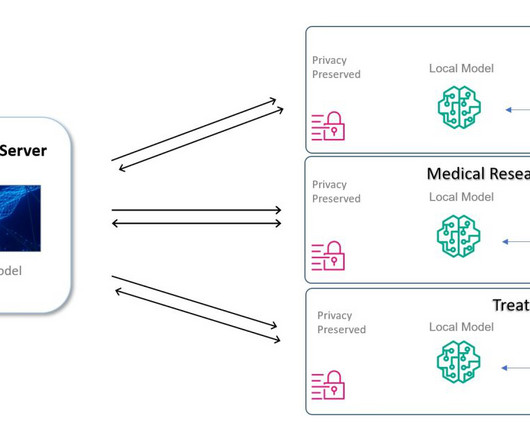

The platform enables a data science team to manage a family of classic ML models for benchmarking statistics across multiple medical units. Users from several business units have been trained and onboarded to the platform, and the number of users is expected to grow in 2024. Another important metric is the efficiency of data science users.
