Speed ML development using SageMaker Feature Store and Apache Iceberg offline store compaction

AWS Machine Learning

DECEMBER 21, 2022

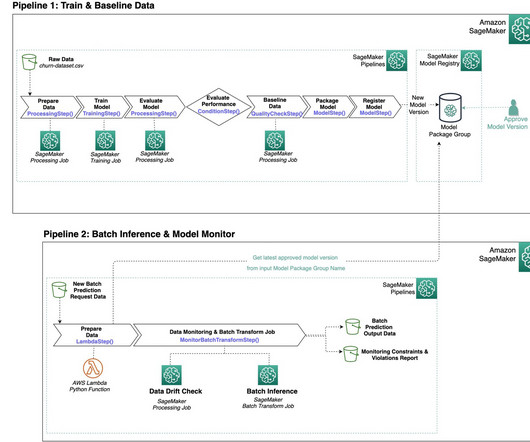

SageMaker Feature Store automatically builds an AWS Glue Data Catalog for the offline store during feature group creation. Customers can also read offline store data from a Spark runtime and run big data processing for ML feature analysis and feature engineering. Table formats, such as Apache Iceberg, provide a way to abstract a collection of data files as a single table.
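
To make the feature group creation step concrete, here is a minimal sketch of a request that asks for an Iceberg offline store and leaves automatic Glue table creation enabled. It only builds the argument dictionary for the boto3 SageMaker `create_feature_group` call, so it runs without AWS credentials. The helper name `build_feature_group_request`, the feature group name, the bucket, the role ARN, and the feature names are all hypothetical placeholders, not values from the original post.

```python
from typing import Any, Dict, List


def build_feature_group_request(
    name: str,
    s3_uri: str,
    role_arn: str,
    record_id_feature: str,
    event_time_feature: str,
    feature_definitions: List[Dict[str, str]],
    table_format: str = "Iceberg",
) -> Dict[str, Any]:
    """Build keyword arguments for the SageMaker create_feature_group call.

    Hypothetical helper for illustration; it does not call AWS.
    """
    if table_format not in ("Glue", "Iceberg"):
        raise ValueError(f"unsupported table format: {table_format}")
    return {
        "FeatureGroupName": name,
        "RecordIdentifierFeatureName": record_id_feature,
        "EventTimeFeatureName": event_time_feature,
        "FeatureDefinitions": feature_definitions,
        "OfflineStoreConfig": {
            "S3StorageConfig": {"S3Uri": s3_uri},
            # False keeps the automatic Glue Data Catalog table creation on.
            "DisableGlueTableCreation": False,
            "TableFormat": table_format,
        },
        "RoleArn": role_arn,
    }


# All values below are placeholders chosen for this sketch.
request = build_feature_group_request(
    name="customers-demo",
    s3_uri="s3://example-bucket/feature-store",
    role_arn="arn:aws:iam::123456789012:role/ExampleFeatureStoreRole",
    record_id_feature="customer_id",
    event_time_feature="event_time",
    feature_definitions=[
        {"FeatureName": "customer_id", "FeatureType": "String"},
        {"FeatureName": "event_time", "FeatureType": "String"},
        {"FeatureName": "lifetime_value", "FeatureType": "Fractional"},
    ],
)

# To create the feature group for real (needs boto3 and AWS credentials):
#   import boto3
#   boto3.client("sagemaker").create_feature_group(**request)
```

Keeping the request construction separate from the AWS call makes it easy to inspect or unit test the configuration before anything is created in an account.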
