Improving your LLMs with RLHF on Amazon SageMaker

AWS Machine Learning

SEPTEMBER 22, 2023

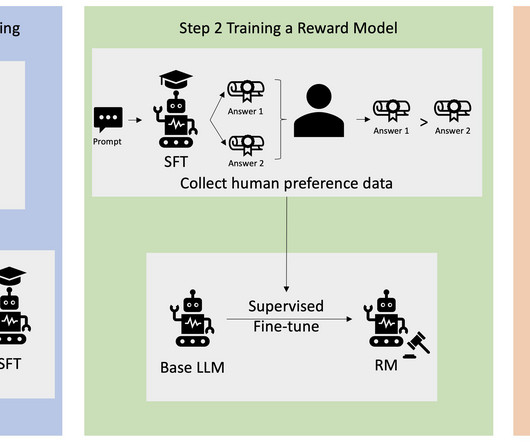

Reinforcement Learning from Human Feedback (RLHF) is recognized as the industry-standard technique for ensuring that large language models (LLMs) produce content that is truthful, harmless, and helpful. Gone are the days when you needed unnatural prompt engineering to get base models, such as GPT-3, to solve your tasks.
