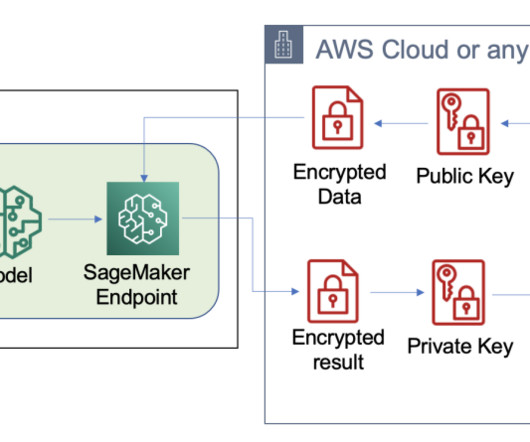

Enable fully homomorphic encryption with Amazon SageMaker endpoints for secure, real-time inferencing

AWS Machine Learning

MARCH 23, 2023

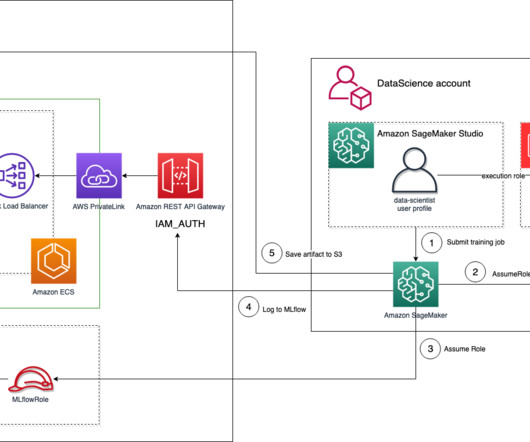

Applications and services can call the deployed endpoint directly or through a serverless architecture fronted by Amazon API Gateway. To learn more about architectural best practices for real-time endpoints, refer to "Creating a machine learning-powered REST API with Amazon API Gateway mapping templates and Amazon SageMaker."
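As a rough illustration of the direct-call path, the sketch below wraps the SageMaker runtime `invoke_endpoint` operation in a small helper. The helper name `invoke_realtime_endpoint`, the endpoint name in the usage comment, and the `application/octet-stream` content type are assumptions made for this example, not details taken from the post; the payload is treated as opaque bytes, as it would be for an already encrypted request.

```python
from typing import Any


def invoke_realtime_endpoint(
    client: Any,
    endpoint_name: str,
    payload: bytes,
    content_type: str = "application/octet-stream",  # assumed content type
) -> bytes:
    """Send an opaque payload to a real-time endpoint and return the raw response.

    `client` is expected to behave like boto3.client("sagemaker-runtime").
    It is passed in so the caller controls region and credentials.
    """
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType=content_type,
        Body=payload,
    )
    # The response body is a stream; read it fully into bytes.
    return response["Body"].read()


# Example usage (requires boto3, AWS credentials, and a deployed endpoint;
# "my-endpoint" is a hypothetical name):
#
#   import boto3
#   runtime = boto3.client("sagemaker-runtime")
#   result = invoke_realtime_endpoint(runtime, "my-endpoint", encrypted_bytes)
```

Passing the client in as an argument keeps the helper easy to test with a stand-in object. When the endpoint sits behind API Gateway instead, callers would send an HTTPS request to the API's URL rather than use the runtime client directly.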
