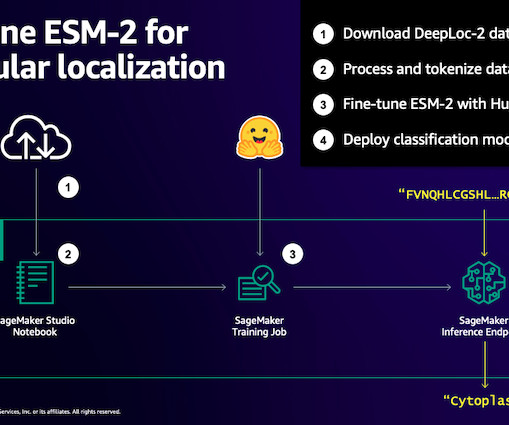

Efficiently fine-tune the ESM-2 protein language model with Amazon SageMaker

AWS Machine Learning

MARCH 6, 2024

Large language models (LLMs) are pretrained on huge datasets and can then be adapted to specific tasks, such as text summarization or chatbots.

In our training script, we subclass the Trainer class from the Hugging Face Transformers library with a WeightedTrainer class that takes class weights into account when calculating the cross-entropy loss.
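As a rough illustration, a weighted trainer along these lines might look like the following minimal sketch. It overrides Trainer.compute_loss, pops the labels so the model does not compute its own unweighted loss, and passes per-class weights to PyTorch's nn.CrossEntropyLoss. The class_weights constructor argument and its handling are assumptions for illustration, not necessarily how the training script in this post is written. The method also accepts **kwargs because recent Transformers releases pass extra keyword arguments (such as num_items_in_batch) to compute_loss.

```python
import torch
from torch import nn
from transformers import Trainer


class WeightedTrainer(Trainer):
    """Trainer that applies per-class weights to the cross-entropy loss.

    Assumption: `class_weights` is a hypothetical constructor argument holding
    one weight per label (for example, inverse class frequencies).
    """

    def __init__(self, *args, class_weights=None, **kwargs):
        super().__init__(*args, **kwargs)
        self.class_weights = class_weights

    def compute_loss(self, model, inputs, return_outputs=False, **kwargs):
        # Remove the labels so the model does not compute its own (unweighted) loss.
        labels = inputs.pop("labels")
        outputs = model(**inputs)
        logits = outputs.logits

        # Build the weight tensor on the same device and dtype as the logits.
        weight = None
        if self.class_weights is not None:
            weight = torch.as_tensor(
                self.class_weights, dtype=logits.dtype, device=logits.device
            )

        # Weighted cross-entropy; positions labeled -100 are ignored by default.
        loss_fct = nn.CrossEntropyLoss(weight=weight)
        # Flatten so the same code handles sequence- and token-level classification.
        loss = loss_fct(logits.view(-1, logits.size(-1)), labels.view(-1))
        return (loss, outputs) if return_outputs else loss
```

In use, you would construct it like a regular Trainer and add the weights, for example WeightedTrainer(model=model, args=training_args, train_dataset=train_ds, class_weights=[1.0, 3.0]), where the model, arguments, dataset, and weight values are placeholders. With weighting, errors on the rarer, up-weighted class contribute more to the loss, which can help on imbalanced labels.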
