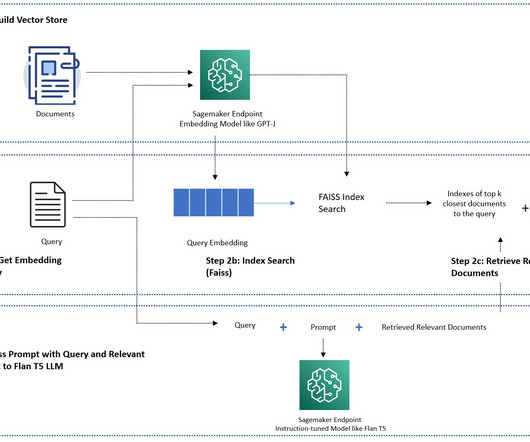

Build knowledge-powered conversational applications using LlamaIndex and Llama 2-Chat

AWS Machine Learning

APRIL 8, 2024

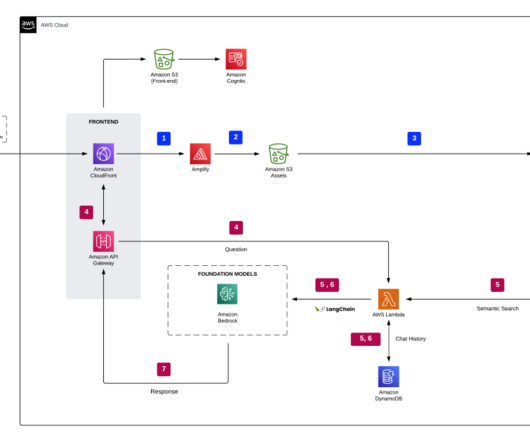

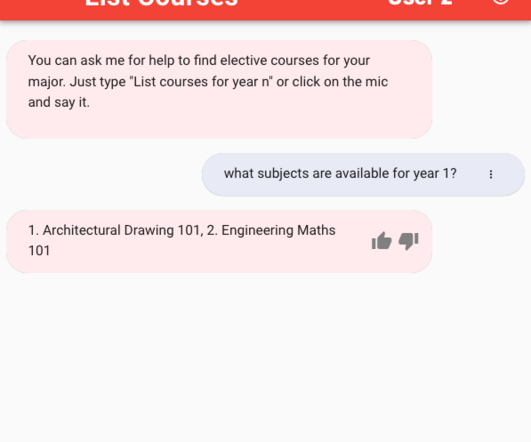

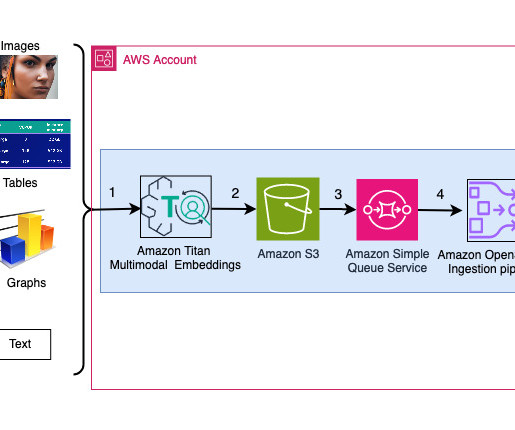

Large language models (LLMs) make it possible to draw accurate, insightful answers from vast amounts of text. With these models, you can ingest text corpora, index the critical knowledge they contain, and generate text that answers users’ questions precisely and clearly.