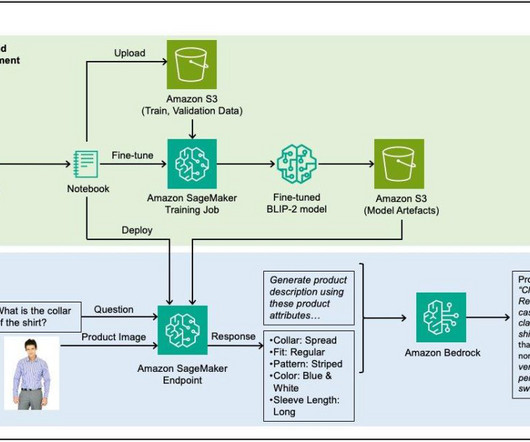

Generating fashion product descriptions by fine-tuning a vision-language model with SageMaker and Amazon Bedrock

AWS Machine Learning

MAY 22, 2024

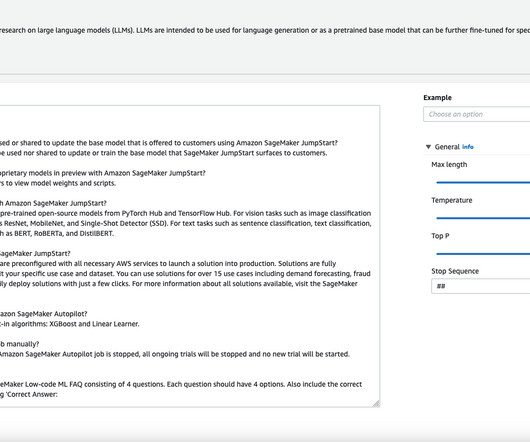

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon through a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI.

Let's personalize your content