Generating value from enterprise data: Best practices for Text2SQL and generative AI

AWS Machine Learning

JANUARY 4, 2024

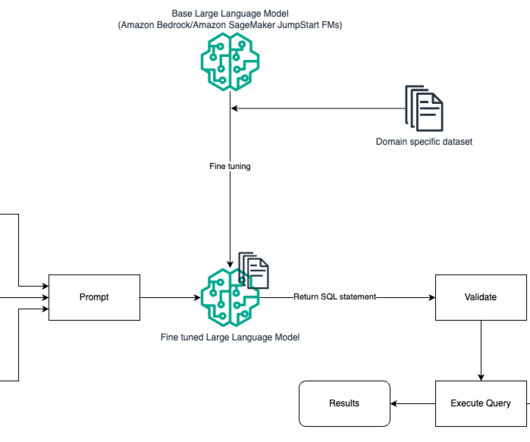

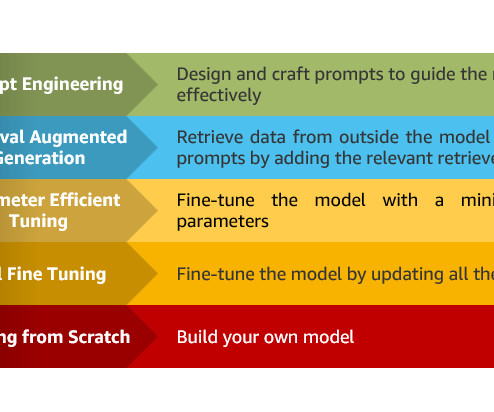

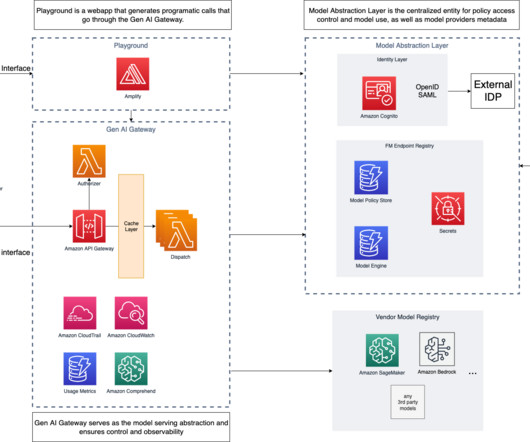

In this post, we introduce text-to-SQL (Text2SQL) and explore its use cases, challenges, design patterns, and best practices. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) through a single API, enabling you to easily build and scale generative AI applications.
