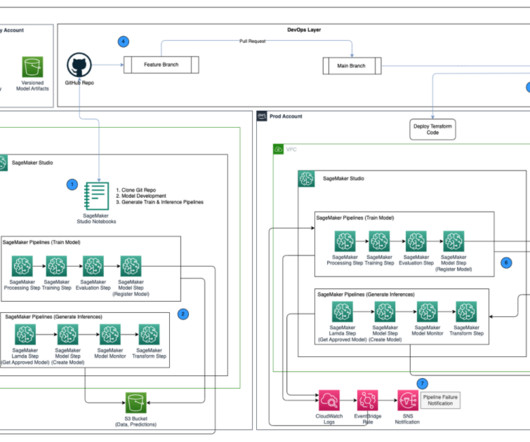

Promote pipelines in a multi-environment setup using Amazon SageMaker Model Registry, HashiCorp Terraform, GitHub, and Jenkins CI/CD

AWS Machine Learning

NOVEMBER 9, 2023

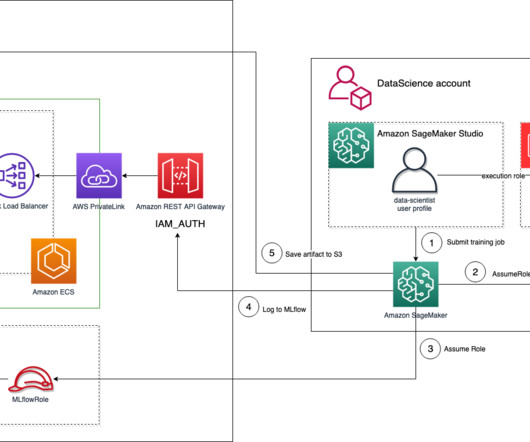

Central model registry: Amazon SageMaker Model Registry is set up in a separate AWS account to track model versions generated across the dev and prod environments. Approve the model version in SageMaker Model Registry in the central model registry account, then create a pull request to merge the code into the main branch of the GitHub repository.
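The approval step can also be scripted rather than done in the console. The following is a minimal sketch, not the post's own implementation: it assumes you already have credentials for the central model registry account, and the helper names `approve_model_version` and `make_sagemaker_client` are hypothetical. The only AWS API call used is SageMaker's `update_model_package`.

```python
from typing import Any, Dict


def make_sagemaker_client(region_name: str) -> Any:
    """Create a SageMaker client (hypothetical helper).

    Assumes the active credentials belong to, or can assume a role in,
    the central model registry account.
    """
    import boto3  # imported lazily so the approval helper has no hard dependency

    return boto3.client("sagemaker", region_name=region_name)


def approve_model_version(
    sm_client: Any,
    model_package_arn: str,
    note: str = "Approved for promotion",
) -> Dict[str, Any]:
    """Mark one model package version as Approved in the Model Registry."""
    return sm_client.update_model_package(
        ModelPackageArn=model_package_arn,
        ModelApprovalStatus="Approved",
        ApprovalDescription=note,
    )
```

To use it, call `approve_model_version(make_sagemaker_client("<your-region>"), "<model-package-arn>")` with the ARN of the model version you want to promote. Taking the client as a parameter keeps the helper easy to exercise with a stub before pointing it at a real account.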
