This blog post is written courtesy of the Interactions R&D team.

Improved artificial intelligence (AI) technologies, along with the widespread availability of capable devices, have led to a recent surge in speech-driven conversational systems that allow users to interact with virtual agents. Strong adoption has provided the impetus to make these conversational systems not only ubiquitous but also available to wider markets by offering them in multiple languages. Doing so, however, requires building language-specific AI components for each new language. The core AI components behind these conversational spoken language understanding (SLU) systems are automatic speech recognition (ASR), natural language understanding (NLU), and dialog management (DM).

The ASR component takes the input speech signal and produces a text hypothesis, which the downstream components process to ultimately return a response and act on the speaker’s request. Building a state-of-the-art ASR system is a resource-intensive process, and the cost and time needed to acquire all of the required resources are a barrier to offering conversational SLU systems that cover a large assortment of languages.

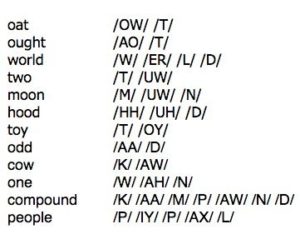

One of those resources relates to the modeling of words as units of sound. Most ASR systems today model words using sub-word units of sound called phonemes. The acoustic realization of a phoneme can vary slightly depending on the preceding and following phonemes, so context-dependent phonemes are typically used. The mapping of sequences of phonemes to words is provided by a pronunciation model, whose mappings are derived from a hand-crafted pronunciation dictionary listing words and their pronunciations.
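To make the pronunciation model concrete, here is a minimal Python sketch. It assumes a tiny hand-written dictionary with ARPAbet-style phoneme symbols; the entries, the `LEXICON` name, the `sil` boundary symbol, and the `to_triphones` helper are all illustrative assumptions rather than parts of any production system. It shows how a word's phoneme sequence might be expanded into context-dependent units written as `left-center+right`.

```python
# Toy pronunciation dictionary (illustrative entries, ARPAbet-style symbols).
LEXICON = {
    "cat": ["K", "AE", "T"],
    "go": ["G", "OW"],
}


def to_triphones(phonemes, boundary="sil"):
    """Expand a phoneme sequence into context-dependent units (left-center+right)."""
    # Pad both ends so the first and last phonemes also have a context.
    padded = [boundary] + list(phonemes) + [boundary]
    return [
        f"{padded[i - 1]}-{padded[i]}+{padded[i + 1]}"
        for i in range(1, len(padded) - 1)
    ]


def word_to_units(word):
    """Look up a word; fail loudly when the dictionary has no entry for it."""
    if word not in LEXICON:
        raise KeyError(f"no pronunciation for {word!r}")
    return to_triphones(LEXICON[word])


print(word_to_units("cat"))  # ['sil-K+AE', 'K-AE+T', 'AE-T+sil']
```

Note that any word missing from the dictionary cannot be modeled at all, which is why dictionary coverage and quality matter so much for phoneme-based systems.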

This presents a challenge when expanding conversational SLU services to cover many languages because acquiring good-quality pronunciation dictionaries for multiple languages can be costly. In addition, an expert with knowledge of the language is needed both for manually tuning the pronunciation dictionary and for specifying the linguistic questions used to determine the set of context-dependent phoneme states for acoustic modeling.

However, if graphemes (characters) are selected as the sub-word unit for modeling instead of phonemes, we can eliminate the need for pronunciation dictionaries. The mapping from sequences of graphemes to words is trivial and doesn’t require anything more than knowing how to spell the words you want to be able to recognize. This is one way to reduce resource dependencies when rapidly developing ASR for SLU in many new languages.
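As a rough illustration of how trivial this mapping is, the sketch below builds a grapheme "lexicon" directly from a word list. The `grapheme_lexicon` function name and the choice to lowercase words are assumptions made for this example; a real system would also need to decide how to handle digits, punctuation, and accented or combining characters.

```python
def grapheme_lexicon(words):
    """Map each word to its sequence of graphemes (here, simply its characters)."""
    lexicon = {}
    for word in words:
        normalized = word.lower()  # assumption: case is not modeled
        lexicon[normalized] = list(normalized)
    return lexicon


lexicon = grapheme_lexicon(["ciao", "Grazie"])
print(lexicon["ciao"])    # ['c', 'i', 'a', 'o']
print(lexicon["grazie"])  # ['g', 'r', 'a', 'z', 'i', 'e']
```

No language expert or hand-crafted resource is involved: any word that can be spelled gets an entry automatically.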

While graphemes are an attractive choice for their simplicity, depending on the language, it can be more difficult to model words with graphemes than with phonemes. English has lots of irregular spellings and a low correspondence between graphemes and phonemes. For example, the letter ‘o’ can correspond to many different phonemes (compare “hot”, “go”, “do”, and “women”) and is sometimes absent from the pronunciation altogether, as in “people”.

However, other languages like Italian, while not perfectly phonemic, have pronunciations that tend to be more easily predicted from the word’s spelling.

Even if graphemes make the modeling task more challenging for the ASR system, the impact on the downstream SLU prediction may not be significant, particularly when ASR errors are consistent. If similar performance on the downstream SLU task can be achieved with graphemes instead of phonemes, one obstacle to rapidly deploying conversational SLU in many languages will be removed, because pronunciation dictionaries and language-specific expertise will not be necessary for developing ASR in those languages.

We have investigated grapheme-based acoustic modeling as part of an SLU system for classifying spoken utterances in the customer care domain across several languages [1]. We found that differences in word accuracy between grapheme-based and phoneme-based ASR tended to be small, where they existed at all, and, more promisingly, that performance on the downstream spoken utterance classification task remained competitive with graphemes.
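For readers unfamiliar with the metric, word accuracy is commonly computed as one minus the word error rate, where errors are the substitutions, deletions, and insertions in the best alignment between the reference transcript and the ASR hypothesis. The sketch below assumes that common definition (the exact scoring setup used in [1] is not described here), and the function names and example utterances are invented for illustration.

```python
def edit_distance(ref, hyp):
    """Word-level Levenshtein distance between two token lists."""
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1]


def word_accuracy(reference, hypothesis):
    """Return 1 - WER; can be negative when there are many insertions."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    return 1.0 - edit_distance(ref, hyp) / len(ref)


# One substitution in a six-word reference.
acc = word_accuracy("i want to pay my bill", "i want to pay the bill")
print(round(acc, 3))  # 0.833
```

A substitution like the one above lowers word accuracy but may leave the utterance's intent unchanged, which is one reason the downstream classification result can be more informative than word accuracy alone.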

While the results with grapheme-based acoustic modeling for spoken utterance classification are encouraging, other challenges remain for rapidly deploying multilingual systems. We are also working on addressing the requirements for large amounts of transcribed, in-domain data needed for acoustic and language modeling, as well as exploring “End-to-End” approaches for classifying spoken utterances directly from the audio signal [2, 3, 4].

[1] Price, R., et al. “Investigating the downstream impact of grapheme-based acoustic modeling on spoken utterance classification.” in 2018 IEEE Spoken Language Technology Workshop (SLT), 2018.

[2] Chen, Y.P., et al. “Spoken language understanding without speech recognition.” in 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2018.

[3] Price, R., et al. “Improved end-to-end spoken utterance classification with a self-attention acoustic classifier.” in 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2020.

[4] Price, R. “End-To-End Spoken Language Understanding Without Matched Language Speech Model Pretraining Data.” in 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2020.