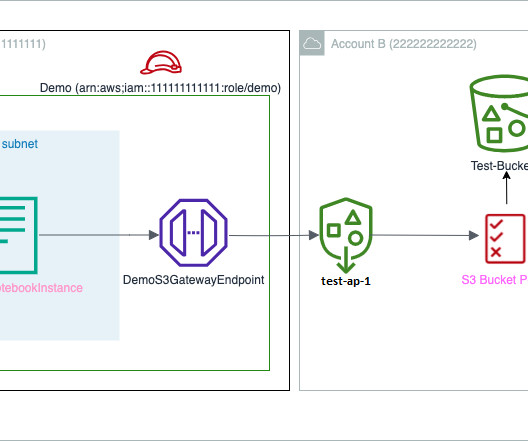

Set up cross-account Amazon S3 access for Amazon SageMaker notebooks in VPC-only mode using Amazon S3 Access Points

AWS Machine Learning

MARCH 13, 2024

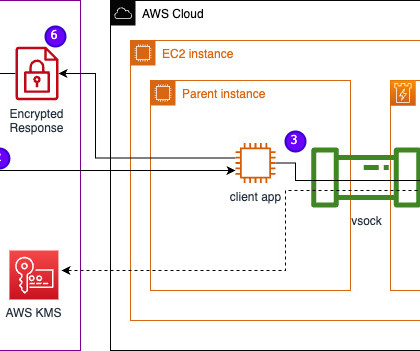

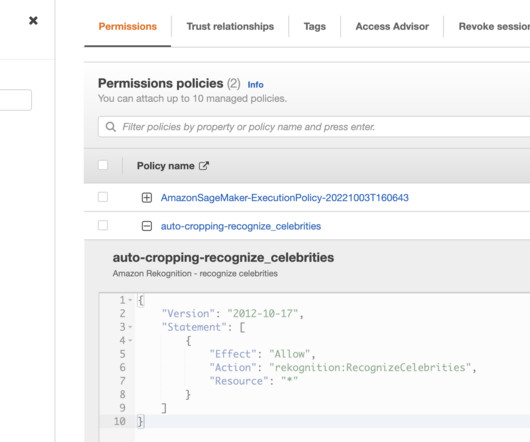

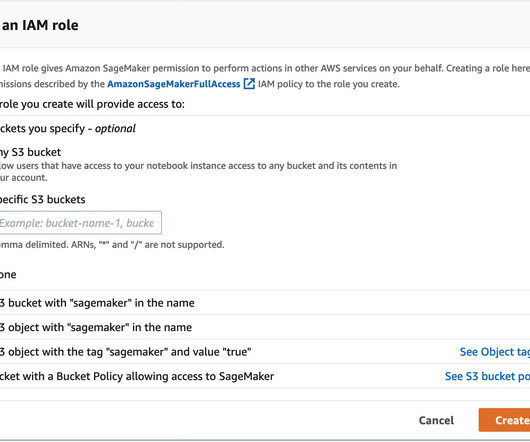

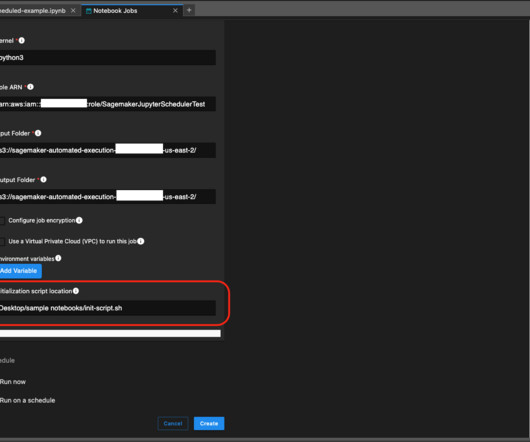

As the number of use cases and datasets grows, managing cross-account access for each application through bucket policy statements becomes unwieldy: the policy grows too long and too complex for a single bucket policy to accommodate. This post walks through the steps for configuring S3 Access Points to enable cross-account access from a SageMaker notebook instance.
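To make the idea concrete, here is a minimal sketch of what a per-application access point policy might look like, built as a plain Python dictionary. The helper name `build_access_point_policy`, the account IDs, the Region, the access point name, and the role name are all hypothetical placeholders and do not come from the original post; only the access point ARN layout and the policy document structure follow the standard S3 conventions.

```python
import json


def build_access_point_policy(region, bucket_account_id, access_point_name, reader_role_arn):
    """Build an access point policy that lets one cross-account role list and read objects.

    All argument values are illustrative assumptions, not values from the post.
    """
    access_point_arn = (
        f"arn:aws:s3:{region}:{bucket_account_id}:accesspoint/{access_point_name}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Object-level reads go through the access point's object/ prefix.
                "Sid": "CrossAccountRead",
                "Effect": "Allow",
                "Principal": {"AWS": reader_role_arn},
                "Action": ["s3:GetObject"],
                "Resource": f"{access_point_arn}/object/*",
            },
            {
                # Listing is granted on the access point itself.
                "Sid": "CrossAccountList",
                "Effect": "Allow",
                "Principal": {"AWS": reader_role_arn},
                "Action": ["s3:ListBucket"],
                "Resource": access_point_arn,
            },
        ],
    }


if __name__ == "__main__":
    policy = build_access_point_policy(
        region="us-east-1",                      # hypothetical Region
        bucket_account_id="111111111111",        # hypothetical bucket-owner account
        access_point_name="example-notebook-ap", # hypothetical access point name
        reader_role_arn="arn:aws:iam::222222222222:role/ExampleNotebookRole",  # hypothetical role
    )
    print(json.dumps(policy, indent=2))
```

Because each application gets its own access point and its own small policy, the bucket policy itself can stay short. In practice, a document like this would typically be attached with the boto3 `s3control` client's `put_access_point_policy` call, the bucket policy would need to delegate access control to the access points, and the role in the notebook's account would also need matching IAM permissions.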
