Every tech trend has a natural lifespan. It starts on the fringes, builds momentum with early adopters, then moves into the mainstream. Subsequently, you get some start-up successes, then a rush of VC-funded efforts, then an overshoot in expectations, and finally a settling-in to some kind of status quo. Think “P2P”, “big data”, “IoT”, or “blockchain”.

But “AI” is a different animal. It doesn’t follow these rules because its definition is fuzzy. “AI” isn’t a particular technology (like blockchain) or even a particular concept (like IoT). This creates a unique challenge for journalists, investors and entrepreneurs.

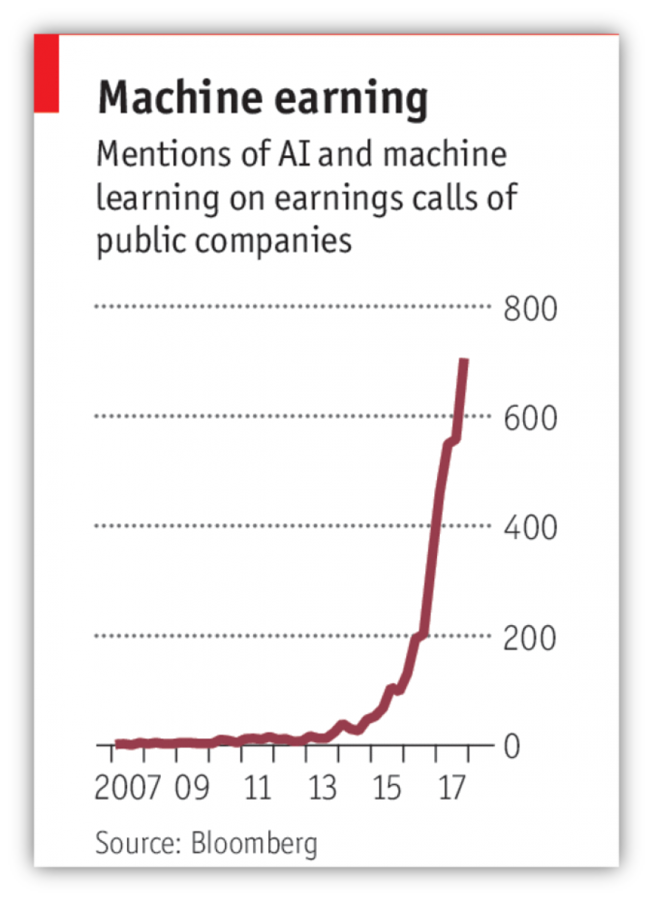

Feverish

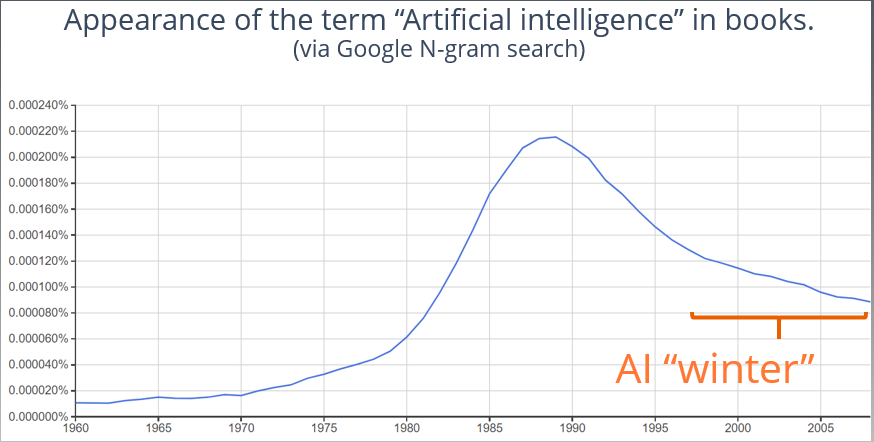

We often talk about concepts in terms of virality. Our world has a bad case of AI fever. Is it here for good? Is this a passing fad? There’s this concept of “AI winter”, referring to the fact that we’ve had a case of this fever before:

Are there any other tech terms where the summer/winter cycle is part of our vocabulary? Kind of a red flag, no? (Hat tip to Paul Kedrosky for n-gram search.)

A number of articles tell us AI winter is already underway. Now, to be fair, that’s like calling a stock market top (you’ll always find someone doing it), and in the internet age you can find a bearish argument for any trend.

But what’s different is that no other term has stuck around long enough for multiple swings of the pendulum. Will we look back in 10 years and think our exuberance was as foolish and irrational as it was in the “summer” of the 1980s?

A Porous Border

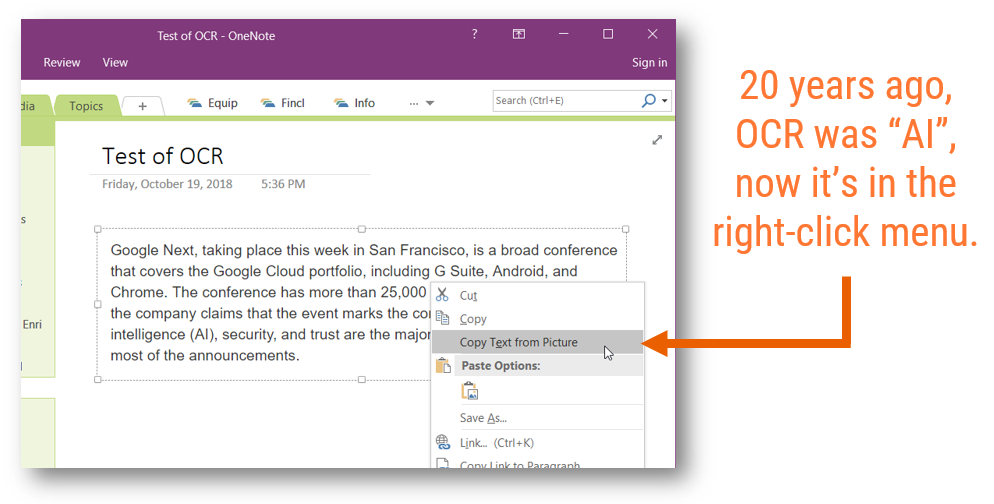

The core problem here is that AI is not a specific technology. The set of technologies that are included in the definition shifts with time. As a particular technology becomes mainstream, we stop thinking about it as “AI”.

Take, for example, handwriting recognition. Decades ago, the post office invested heavily in “AI” technology to automate letter sorting. Room-sized computers: a marvel in their time. But today, optical character recognition (OCR) is mundane. Here’s a screenshot of OneNote, where “extract text” is just a right-click menu item.

So, OCR is no longer AI.

What about speech recognition? It has already reached “good enough” status. Anyone can send audio to Google, AWS, or Nuance and get back text at a cost of pennies per minute.

Someone once told me that “technology” refers to anything that didn’t exist when you were born. In 1950, anything electrical was “technology”. AI seems to follow that model, just with a shortened timeline.

Computers Can Do New Things

As much as we don’t want to be too exuberant, we also want to avoid the twin danger of wholesale dismissal. Clearly, something is happening. Computers have abilities they didn’t have before. Speech recognition took a big leap forward in recent years. Natural language processing (NLP) has gotten much better at extracting intent from messy English sentences. Computer vision is now capable of things that seemed impossible a few years ago: “Is there a pedestrian in this image?” or “Find purses that look like this one.”

It’s understandable that we want to put these advances into some bucket and label it “AI”, but that bucket is too broad to be meaningful. Plus, why is it that a computer doing an image search is “intelligent” but a computer doing a word search is not? Because image search was previously hard and now isn’t? That doesn’t jibe with the meaning of “intelligent”.

A great mind-cleanser is this presentation from Andreessen Horowitz: “AI: What’s Working, What’s Not”.

And chase that down with this from Benedict Evans: How to Think about Machine Learning.

But … The S-Curve!

The bullish argument for AI is that we’re on the cusp of an explosion, that today’s headlines should be seen as hints that the set of mental tasks that computers can do is about to grow rapidly.

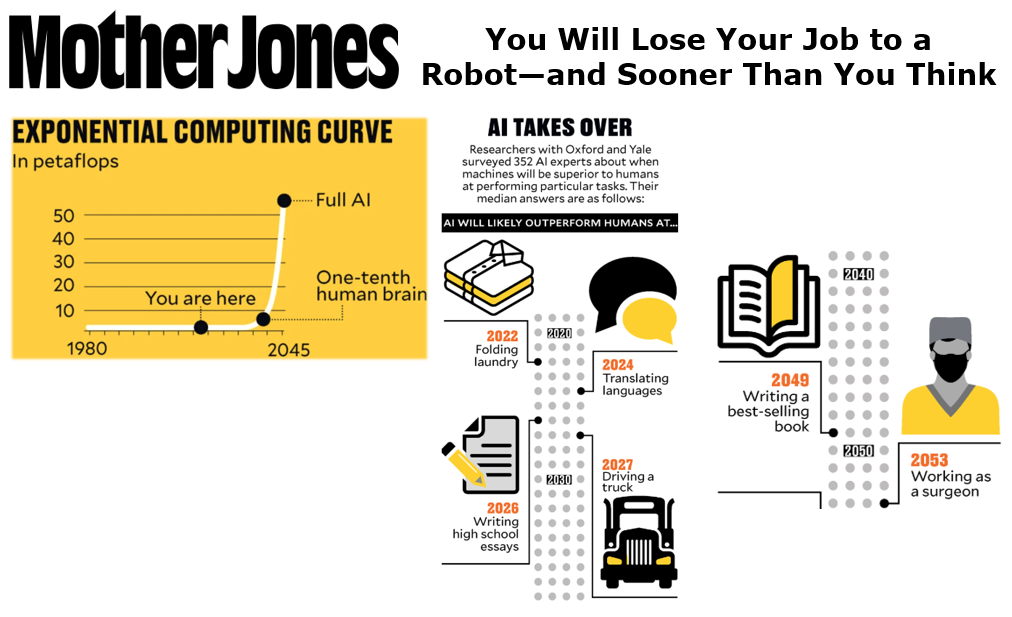

Then you end up with argle-bargle like this:

The problem with shouting “s-curve!” is that, at any particular moment, you don’t know if you’re standing at the start of the upswing or at the top of a short-term burst of improvement. It could be that 2018 was the last stop before the runaway train to the AI singularity. Or it could be that 2018 is where speech recognition (ASR) and NLP became mundane and machine vision really hit primetime. And then, maybe … things get quiet for a while.
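Why is it so hard to tell from the inside? As a rough illustration, take the standard logistic function as a stand-in for an S-curve. This is an assumed model, since nobody knows the real shape of AI progress. Here $L$ is the ceiling, $k$ the growth rate, and $t_0$ the midpoint:

$$
f(t) = \frac{L}{1 + e^{-k(t - t_0)}}, \qquad t \ll t_0 \;\Rightarrow\; e^{-k(t - t_0)} \gg 1 \;\Rightarrow\; f(t) \approx L\,e^{k(t - t_0)}
$$

Well before the midpoint, the S-curve is indistinguishable from pure exponential growth. At $t = t_0$ it reaches $f(t_0) = L/2$ and then starts to flatten. While you are living on the curve, you don't know $L$ or $t_0$, so the same early data fit both “runaway train” and “things get quiet.”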

If we’re going to “steel-man” here, a great argument for the runaway train is from Tim Urban: The Road to SuperIntelligence.

A great counter to that is this piece by François Chollet: The Impossibility of Intelligence Explosion.

Effect on Customer Service and Call Centers

Two of the technologies currently in the “AI” bucket that have a direct impact on customer service are ASR (speech recognition) and NLP (natural language processing). The latter is the key to making chatbots work.

There are definitely cases where these technologies are delivering real ROI, including reducing the need for agents. Here’s a great example: Alight’s “Lisa” Empowers Self-Service Customer Decisions for HR Benefits Enrollment. Alight provides outsourced benefits administration and HR services to 10 million people. They claim their new chatbot reduced live chat engagements by 67%.

Impressive results. I had a chance to ask the executive in charge of the project if this actually led to a reduction in head count. His answer was a qualified yes. You can hear his exchange with me in this video at 28:20.

But I don’t see this as evidence of the call center’s human-less future. Rather, I see successful chatbot projects as extensions of our decades-long effort to optimize and improve self-service. They are part of a continuum that includes websites, smart speakers, mobile apps and IVR. In general, companies and consumers are both getting better at handling more interactions via self-service.

On the other hand, more interactions now happen remotely, replacing in-person ones. This, among other factors, may be why we continue to see both call centers and agent employment grow. That’s right: agent employment continues to go up. For more on that, see No, Google’s Duplex is Not Going to Replace Call Centers and AI is Not Reducing Call Center Agent Employment.

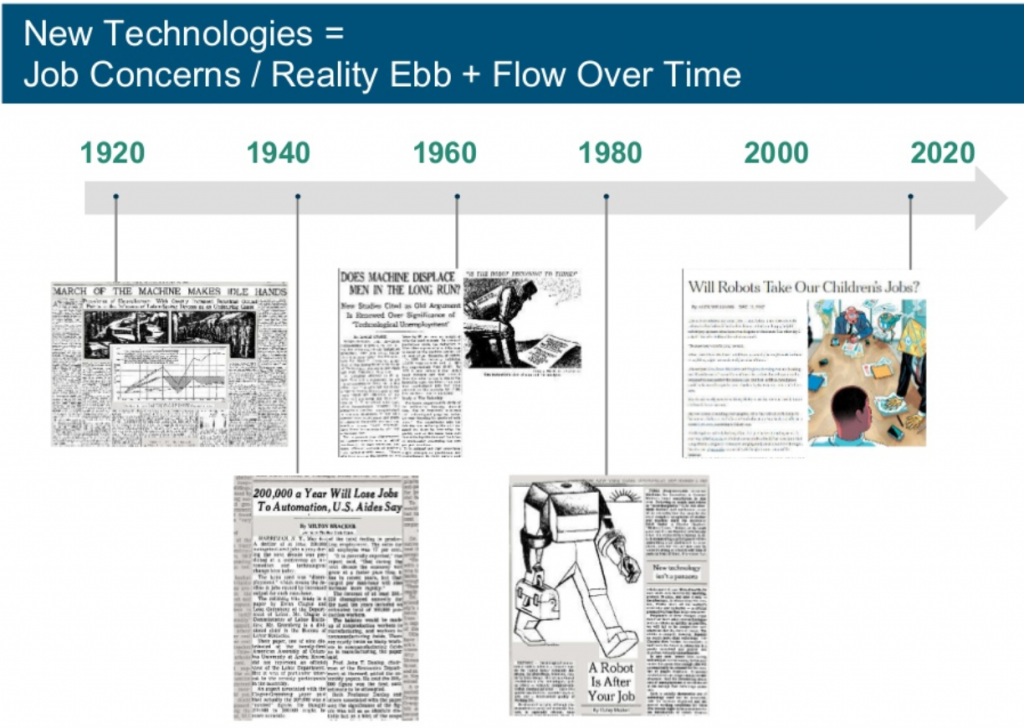

The fear that “the robots are taking our jobs” has a long history. See below (from Mary Meeker).

Last week we did a webinar on this topic. The on-demand version is here.

So … What to Do?

Should we all be more careful in how we talk about AI? Sure. But it’s a fool’s errand to tell founders, “Be less enthusiastic about your space!” Boundless enthusiasm is basically a prerequisite for being a founder.

Investors want an easy story, so founders and executives say “AI”.

Analysts and journalists want an easy story, so marketers and publicists say “AI”.

We would all benefit if we started referring to specific technologies rather than the ill-defined term “AI”. If you have a machine vision start-up, call it that. If your product uses speech recognition, say so. You can still say it with full enthusiasm and gain clarity as a bonus.
